High-intent search has moved upstream. AI systems resolve most of it without sending traffic anywhere, and the share of these answer-only sessions keeps compounding.

Google admits AI Overviews appear in billions of results; Similarweb shows LLM referrals as a sliver of traffic, even as AI-style query sessions surge; and Perplexity and ChatGPT cohorts increasingly complete decisions in-flow. The spreadsheet says “not material.” The market structure says “material already happened.” CEOs who manage to the dashboard will be late by design.

This piece argues that brands optimising for yesterday’s visibility layer are surrendering position in the layer that is actively setting tomorrow’s defaults. The absence of traffic is the signature of a platform transition in flight. Transitions have clocks: retrieval hierarchies harden, partnership rosters lock, and new habits congeal on 45–90 day cycles. Optionality decays exponentially.

The framework: value chains and defaults

Let’s start with value chains: who captures the margin as technology shifts? With search, value concentrated in ad marketplaces; publishers traded content for traffic; brands bought attention and built mental availability (the ease of coming to mind) through reach and distinctive assets. With LLMs, value concentrates one layer up, at the recommendation interface that converts questions into answers. The consumer’s “unit of work” is no longer clicking and comparing; it is accepting a default shortlist. The marginal click is worth less because the marginal answer is worth more.

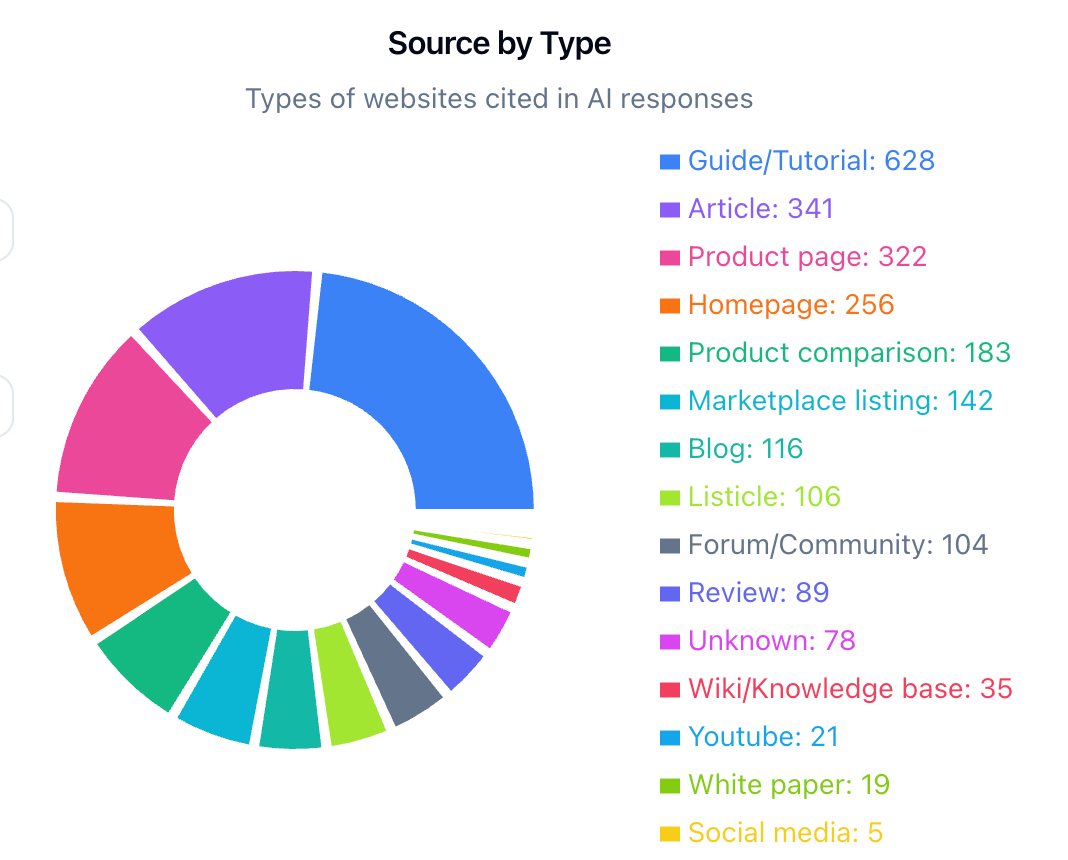

It would be a mistake to think of answer engines as neutral pipes. They are retrieval stacks with preferences. The stack privileges (1) partners with APIs or data rights; (2) structured, citation-ready content; (3) fast, high-authority indices; and only then (4) the general web. That ranking of inputs is the new ranking of brands.

In this world, traffic becomes a dividend. The asset is algorithmic presence. If you optimise for dividends while your competitor optimises for assets, you might still book near-term sessions, but you will wake up priced out of the default set.

Why waiting re-prices your cost of entry

There are three compounding mechanisms at work:

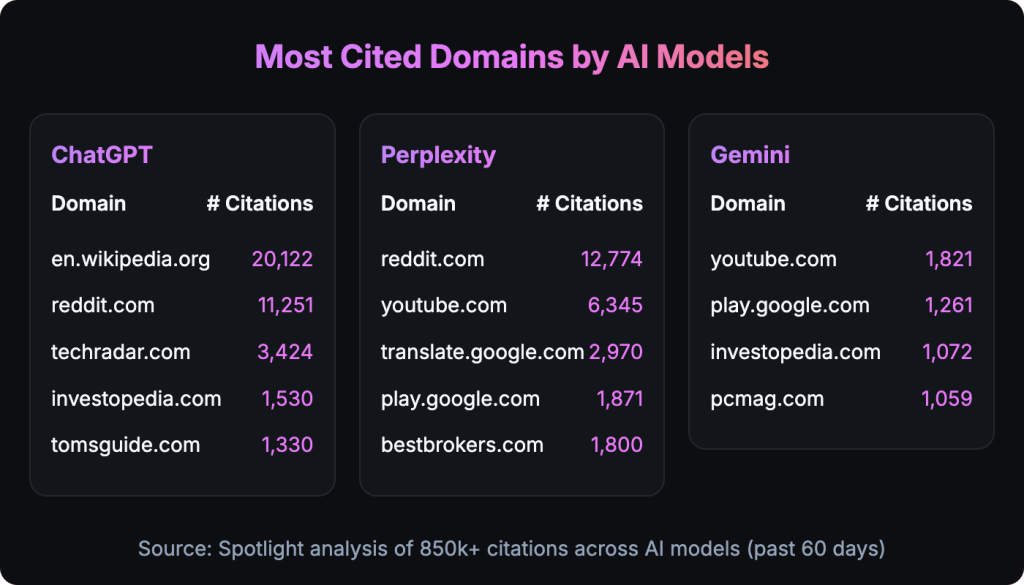

Citation feedback loops. Sources cited more often become more likely to be retrieved later, even when competing sources are of equal quality. This is network-effect behaviour inside the model. The early-mover benefit is mathematical, not marketing folklore.

Partnership and pipeline lock-in. Integrations across search APIs and content partners impose switching costs measured in months and model regressions. Once the platform picks a stack, reshuffling the deck is rare. Your window is before the pick.

Habit formation and default bias. High-value cohorts (knowledge workers; professional buyers; premium consumers) settle into “ask the model first” workflows within 45–90 days. When the tool becomes the default colleague, the brands it habitually recommends become the user’s default consideration. We all know from experience that defaults are sticky even when switching is “free.” When switching has any friction (a login, a new UI, uncertainty), defaults dominate.

Add those mechanisms and you get an exponential Cost of Late Entry curve: the longer you wait, the more you must spend, and the less your spend can achieve. It is not linear catch-up; it is paying more to get less.
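The citation feedback loop can be illustrated with a toy model. This is a minimal sketch, assuming (hypothetically) that an engine picks which source to cite in proportion to that source's past citations plus a small base weight, and that all sources are of equal quality. It is a Pólya-urn style simulation, not a description of how any real answer engine ranks sources.

```python
import random


def simulate_citations(head_start: dict[str, int], rounds: int,
                       base_weight: float = 1.0, seed: int = 7) -> dict[str, float]:
    """Toy preferential-attachment model of citation feedback.

    Each round, one source is cited with probability proportional to
    (base_weight + citations so far). Sources are assumed equal in quality;
    only their citation history differs. Returns each source's final share
    of all citations (the input dict is not modified).
    """
    rng = random.Random(seed)  # seeded so runs are reproducible
    counts = dict(head_start)
    names = list(counts)
    for _ in range(rounds):
        weights = [base_weight + counts[n] for n in names]
        winner = rng.choices(names, weights=weights, k=1)[0]
        counts[winner] += 1
    total = sum(counts.values())
    return {n: counts[n] / total for n in names}


# Hypothetical start: brand A has 20 prior citations, brands B and C have 2 each.
shares = simulate_citations({"A": 20, "B": 2, "C": 2}, rounds=5000)
print({name: round(share, 2) for name, share in shares.items()})
```

In an urn model like this the leader's expected long-run share equals its initial weight fraction, here (20 + 1) / (24 + 3) ≈ 0.78, far above the one-third that equal quality would suggest. The head start, not the content, sets the split.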

The measurement trap: managing to the wrong variable

Executives love clarity, and “percent of traffic from LLMs” is a clear number. It is also the wrong number at this stage. Consider four distortions:

Disintermediated success. If an LLM cites your brand, answers the user, and the user decides without clicking, you acquired awareness without traffic. That is success that looks like nothing in GA4.

Attribution leakage. A meaningful share of LLM-originating visits arrives as “direct” or untagged because of in-app browsers, API flows, and privacy policies. Your tidy pie chart is lying to you with a straight face.

Segment skew. Early AI users are disproportionately decision-makers and high-value spenders. Measuring volume without weighting value is unit-economics malpractice.

Time-to-lock-in. The KPI that matters is not last-click revenue; it is Time-to-Citation-Lock-in: how quickly you become part of the machine’s default retrieval set before feedback loops harden.
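The article does not give Time-to-Citation-Lock-in a formula. One hypothetical way to operationalise it is the first week in which your weekly citation rate crosses a threshold and then holds there for a set number of consecutive weeks. The 30% threshold mirrors the board-slide goal later in this piece; the four-week hold is an assumption.

```python
from typing import Optional


def time_to_lock_in(weekly_rates: list[float], threshold: float = 0.3,
                    hold: int = 4) -> Optional[int]:
    """Return the index of the first week that starts a run of `hold`
    consecutive weeks with citation rate >= threshold, or None if no
    such run exists yet.
    """
    run_start, run_length = None, 0
    for week, rate in enumerate(weekly_rates):
        if rate >= threshold:
            if run_length == 0:
                run_start = week  # a new qualifying run begins here
            run_length += 1
            if run_length >= hold:
                return run_start
        else:
            run_length = 0  # a dip below threshold resets the run
    return None


rates = [0.05, 0.12, 0.31, 0.22, 0.33, 0.35, 0.38, 0.41, 0.40]
print(time_to_lock_in(rates))  # → 4
```

Week 2 crosses the threshold but drops back in week 3, so the run that counts starts in week 4.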

Put differently: the metrics that tell you to wait are inherently lagging; the metrics that tell you to move are leading and noisier. Strategy is choosing which noise to trust.
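To make the segment-skew point concrete, here is a worked example with hypothetical numbers: AI-referred sessions that are a small share of volume can be a much larger share of value if each session is worth more.

```python
def share_of_volume_and_value(segments: dict[str, tuple[int, float]],
                              segment: str) -> tuple[float, float]:
    """Return (share of sessions, share of value) for one segment.

    `segments` maps a segment name to (sessions, average value per session).
    """
    total_sessions = sum(s for s, _ in segments.values())
    total_value = sum(s * v for s, v in segments.values())
    sessions, value_per_session = segments[segment]
    return sessions / total_sessions, sessions * value_per_session / total_value


# Hypothetical month: 2,000 AI-referred sessions worth 12.0 each,
# 98,000 other sessions worth 3.0 each.
traffic = {"ai": (2_000, 12.0), "other": (98_000, 3.0)}
volume_share, value_share = share_of_volume_and_value(traffic, "ai")
print(f"{volume_share:.1%} of sessions, {value_share:.1%} of value")
```

With these made-up inputs the AI segment is 2.0% of sessions but about 7.5% of value; a go/no-go gate on the first number ignores the second.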

What changes for brand building

Mental availability still matters. The mechanism changes. Historically you built it with reach and distinctive assets so that when a buyer entered a category, your brand “came to mind.” In conversational interfaces, the model’s memory is the gatekeeper to the human’s memory. The practical translation:

Distinctive assets remain crucial (they are the hooks that communicate signal quickly), but you must encode them in machine-parsable form: consistent product names, canonical claims, structured specs, and conflict-free facts across touchpoints.

Category entry points (CEPs) still matter, but they must be mapped to query intents expressed as questions (“Which CRM for a 50-person sales team with heavy outbound?”) rather than keywords (“best crm small business”).

Broad reach still creates salience, yet citation frequency across trusted nodes (reference sites, standards bodies, credible reviewers) is now the shortest path into the model’s retrieval pathways. You need the model to “remember” you when it answers, even if the human does not click.
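One way to check for conflict-free facts is to collect the same product attributes from each touchpoint (site, feeds, retailer listings, press kit) and flag any attribute that takes more than one value. This is a minimal sketch; the touchpoint names, attributes and values are hypothetical.

```python
from collections import defaultdict


def find_conflicts(touchpoints: dict[str, dict[str, str]]) -> dict[str, dict[str, list[str]]]:
    """Return attributes whose values disagree across touchpoints.

    `touchpoints` maps a touchpoint name to {attribute: value}.
    The result maps each conflicting attribute to {value: [touchpoints]}.
    Values are compared after trimming whitespace and lowercasing.
    """
    seen = defaultdict(lambda: defaultdict(list))
    for source, facts in touchpoints.items():
        for attribute, value in facts.items():
            seen[attribute][value.strip().lower()].append(source)
    # Keep only attributes that appear with more than one distinct value.
    return {
        attribute: dict(values)
        for attribute, values in seen.items()
        if len(values) > 1
    }


facts = {
    "website": {"name": "Acme Pro 2", "battery_hours": "12"},
    "retail_feed": {"name": "ACME Pro 2", "battery_hours": "10"},
    "press_kit": {"name": "Acme Pro II", "battery_hours": "12"},
}
print(find_conflicts(facts))
```

With these inputs both the name ("Pro 2" versus "Pro II") and the battery claim are flagged. Lowercasing means "ACME Pro 2" and "Acme Pro 2" count as the same value; drop the `.lower()` if casing is itself part of your distinctive assets.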

When query-to-decision velocity compresses (e.g., from eight touchpoints to two), the premium on first impression explodes. The brand’s job is to be in those first two answers. Everything else is theatre.

Two tiers are emerging, and you must pick

A candid look at the retrieval stack shows a two-tier market forming:

Tier 1 (Participation Rights): API/data partners, canonical data providers, citation-optimised publishers. Benefits: priority indexing, fast retrieval, enhanced attribution, higher citation probability, occasionally preferential formatting in answers.

Tier 2 (Commodity Access): Everyone else on the open web. Benefits: crawl inclusion with lag; unpredictable refresh; citation subject to chance and popularity elsewhere.

This is not a moral judgment; it is how LLM retrieval architecture works. The strategic question is simple: do you pursue participation rights, or do you accept commodity status and plan to outspend it later? The former is a partnership and data discipline. The latter is a marketing tax with compounding interest.

Predictions

Platform consolidation: Within 24 months, three to five answer engines will control >80% of AI-mediated discovery in Western markets (e.g., GPT-native search, Gemini/Google, Claude/Anthropic-aligned, and one “open web” challenger with strong browsing/citation transparency). Fragmentation beyond that is noise.

Budget reallocation: By mid-2026, leading CMOs will allocate 10–15% of “search/SEO” spend to LLM Visibility Programs, including structured data pipelines, content refactors for citation-readiness, and API/partnership fees. By 2027 it will appear in board decks as a standard line item.

New KPI canon: “Brand Presence” (share of relevant queries where your brand appears) and “Partnership Advantage Ratio” (relative citation uplift from direct integrations) become standard competitive benchmarks; tool vendors and analyst firms will normalise them as category metrics.

Retail and B2B shortlists compress: In categories where decision cycles can safely compress (consumer electronics accessories, SaaS categories with clear ICP fit), LLM answers will reduce the average number of visited options by 30–50%. Shelf space shrinks. Being off the shelf is existential.

Late-entry tax becomes visible: By 2027, categories with meaningful LLM presence will exhibit a 3–10x cost premium for brands trying to enter the default set post-lock-in (seen as sustained SOV loss despite escalated spend). Analysts will misattribute this to “creative fatigue” or “market saturation”; the underlying cause will be retrieval position.
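The “New KPI canon” prediction names two metrics without formulas. One plausible formalisation, assuming a fixed prompt set $P$ and citation rates measured on the same prompts with and without a direct integration (illustrative definitions, not an industry standard):

```latex
\mathrm{BrandPresence} = \frac{1}{|P|} \sum_{p \in P} \mathbf{1}\left[\text{brand appears in the answer to } p\right]
\qquad
\mathrm{PAR} = \frac{r_{\text{integrated}}}{r_{\text{open web}}}
```

Here $r$ is the citation rate over $P$; a PAR above 1 indicates citation uplift from the integration.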

Strategy: a 180-day program any CEO can mandate

Let’s forget about moonshots for the moment and take a look at disciplined plumbing, organisational clarity, and a few hard trade-offs.

Days 0–30: Governance, baselines, and data hygiene

Appoint a Head of AI Discoverability (reporting to the CMO with a dotted line to Product/Data). Give them a budget, a cross-functional remit, and a citation-tracking platform such as Spotlight.

Establish a single source of truth for product facts, claims, specs, and pricing. Build a daily export to a public, versioned, machine-parsable endpoint (JSON + schema).

Run a Citation Audit across top answer engines and key prompts (category CEPs, competitor comparisons, buyer use cases). Score presence, position, consistency, and conflicts.
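Audit scoring can be kept simple. This is a minimal sketch, assuming each audit row records the engine, the prompt, the brand's 1-based position in the answer (or none if absent), and a count of stated facts that contradict your source of truth. The engine names, prompts and metric definitions are hypothetical.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class AuditResult:
    engine: str
    prompt: str
    position: Optional[int]  # 1-based rank of the brand in the answer; None if absent
    conflicts: int = 0       # stated facts that contradict the canonical source


def score_audit(results: list[AuditResult]) -> dict[str, float]:
    """Summarise a citation audit into presence, position, consistency, conflicts."""
    cited = [r for r in results if r.position is not None]
    return {
        # share of prompt/engine pairs where the brand appears at all
        "presence": len(cited) / len(results) if results else 0.0,
        # mean rank when cited (lower is better)
        "avg_position": mean(r.position for r in cited) if cited else float("nan"),
        # share of cited answers with no contradicted facts
        "consistency": sum(r.conflicts == 0 for r in cited) / len(cited) if cited else 0.0,
        "conflicts": float(sum(r.conflicts for r in results)),
    }


results = [
    AuditResult("engine_a", "best crm for heavy outbound", 1),
    AuditResult("engine_a", "crm for a 50-person sales team", 3, conflicts=1),
    AuditResult("engine_b", "best crm for heavy outbound", None),
    AuditResult("engine_b", "crm for a 50-person sales team", 2),
]
print(score_audit(results))
```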

Days 31–90: Structured presence and retrieval readiness

Refactor top 50 evergreen pages into citation-ready objects: clear claims → evidence → references; canonical definitions; unambiguous names; inline provenance.

Publish a Developer-grade Product Catalog (public or gated to partners) with IDs, variants, filters, and canonical images. Think “docs” for your products.

Pursue one material partnership (e.g., data feed to a relevant answer engine, vertical marketplace, or respected standards body). Pay the opportunity cost of openness where needed.
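The daily source-of-truth export and the developer-grade catalog can share one pipeline. This is a minimal sketch, assuming the source of truth yields product records with hypothetical field names. It emits schema.org `Product` objects as JSON-LD (one reading of “JSON + schema”) and derives the version from a hash of the content, so consumers can tell when facts actually changed.

```python
import hashlib
import json
from datetime import date


def build_export(products: list[dict], export_date: date) -> dict:
    """Build a versioned JSON-LD export of canonical product facts.

    Each input record is assumed to have: sku, name, brand, description,
    price and currency (hypothetical source-of-truth field names).
    """
    items = [
        {
            "@context": "https://schema.org",
            "@type": "Product",
            "sku": p["sku"],  # stable ID consumers can key on
            "name": p["name"],
            "brand": {"@type": "Brand", "name": p["brand"]},
            "description": p["description"],
            "offers": {
                "@type": "Offer",
                "price": f'{p["price"]:.2f}',
                "priceCurrency": p["currency"],
            },
        }
        for p in sorted(products, key=lambda p: p["sku"])  # stable ordering
    ]
    # Hash a canonical serialisation so identical facts yield identical versions.
    canonical = json.dumps(items, sort_keys=True, separators=(",", ":"))
    version = hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]
    return {"version": version, "exported": export_date.isoformat(), "items": items}


catalog = [{"sku": "AP2-BLK", "name": "Acme Pro 2", "brand": "Acme",
            "description": "12-hour battery, 1.1 kg.", "price": 499, "currency": "EUR"}]
print(json.dumps(build_export(catalog, date(2025, 1, 15)), indent=2))
```

Because the version depends only on content, two daily exports with identical facts share a version; the export date is recorded separately.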

Days 91–180: Feedback loops and compounding

Launch a Prompt-set QA: a stable suite of 200–500 prompts representing your buying situations. Track citation rate weekly in Spotlight. File model feedback where supported.

Build a Citation Network Plan: placements in high-authority reference nodes (credible reviewers, associations, comparison frameworks). Not sponsorships: structured content with provenance.

Pilot AI-native formats (decision tables, selector tools, explorable calculators) that answer engines love to cite. Ship them under your domain with clear licenses.

Integrate this with brand: keep your distinctive assets consistent across the structured outputs. The machine needs to see the same names, colours, claims, and relationships as the human.
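The claim → evidence → references structure from the Days 31–90 refactor, and the structured content with provenance described here, can be enforced as data rather than style guidance. This is a minimal sketch with hypothetical class and field names; the readiness rules (an https source and a verification date for every piece of evidence) are assumptions you would tune.

```python
from dataclasses import dataclass, field


@dataclass
class Evidence:
    statement: str
    source_url: str  # where the evidence can be checked
    as_of: str       # ISO date the evidence was last verified


@dataclass
class CitationReadyClaim:
    product: str     # canonical product name, used verbatim everywhere
    claim: str
    evidence: list[Evidence] = field(default_factory=list)

    def problems(self) -> list[str]:
        """List reasons this claim is not yet citation-ready."""
        issues = []
        if not self.evidence:
            issues.append("claim has no supporting evidence")
        for e in self.evidence:
            if not e.source_url.startswith("https://"):
                issues.append(f"evidence lacks a checkable source: {e.statement!r}")
            if not e.as_of:
                issues.append(f"evidence is undated: {e.statement!r}")
        return issues

    def render(self) -> str:
        """Render claim, evidence and references as text with inline provenance."""
        lines = [f"{self.product}: {self.claim}"]
        for i, e in enumerate(self.evidence, start=1):
            lines.append(f"  Evidence [{i}]: {e.statement} (verified {e.as_of})")
        for i, e in enumerate(self.evidence, start=1):
            lines.append(f"  [{i}] {e.source_url}")
        return "\n".join(lines)


claim = CitationReadyClaim(
    "Acme Pro 2",
    "Battery lasts 12 hours in mixed use.",
    [Evidence("Independent lab test, 12h 10m average",
              "https://example.com/lab-report", "2025-01-10")],
)
print(claim.problems())
print(claim.render())
```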

Organisational implications (the part nobody likes)

Product owns facts. Marketing cannot be the fixer of inconsistent facts. Product and Data must own canonical truth; Marketing packages it.

Legal becomes an enabler. Tighten claims to what you can prove and source. Over-lawyered ambiguity is now a visibility bug.

Analytics changes its job. Build pipelines to detect AI-sourced visits and to estimate dark-referral uplift. Stop using “percent of traffic from LLMs” as a go/no-go gate.

Agency relationships evolve. Brief agencies on citation engineering and partnership brokering, not just copy and backlinks. Insist on prompt-set QA in retainer scopes.
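A starting point for detecting AI-sourced visits is a referrer and UTM classifier. This is a minimal sketch: the hostname list is illustrative and incomplete, and the `utm_source` check rests on the assumption (worth verifying in your own logs) that some assistants, ChatGPT among them, tag outbound links with `utm_source=chatgpt.com`. Whatever stays unclassified is where dark-referral uplift has to be estimated rather than observed.

```python
from typing import Optional
from urllib.parse import parse_qs, urlparse

# Illustrative, incomplete list; AI referrer hostnames change and must be maintained.
AI_REFERRER_HOSTS = {
    "chatgpt.com": "chatgpt",
    "chat.openai.com": "chatgpt",
    "perplexity.ai": "perplexity",
    "gemini.google.com": "gemini",
    "copilot.microsoft.com": "copilot",
    "claude.ai": "claude",
}


def classify_visit(landing_url: str, referrer: str = "") -> Optional[str]:
    """Return an AI source label for a visit, or None if no AI signal is found.

    Checks the referrer hostname first, then a utm_source query parameter
    on the landing URL. Visits with neither signal stay unclassified.
    """
    host = (urlparse(referrer).hostname or "").removeprefix("www.")
    if host in AI_REFERRER_HOSTS:
        return AI_REFERRER_HOSTS[host]
    query = parse_qs(urlparse(landing_url).query)
    utm = query.get("utm_source", [""])[0].lower().removeprefix("www.")
    return AI_REFERRER_HOSTS.get(utm)


print(classify_visit("https://example.com/p", "https://www.perplexity.ai/search?q=crm"))
print(classify_visit("https://example.com/p?utm_source=chatgpt.com"))
print(classify_visit("https://example.com/p", "https://www.google.com/"))
```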

A contrarian view, to test the thesis

Could LLM search fizzle like voice? Possibly. Falsifiers would include: persistent factual error rates that erode trust; regulatory bans on model outputs for product categories; or a consumer reversion to direct search due to cost or latency spikes. If any of those stick, traffic will remain with traditional search, and this investment will look early.

But the option value of early presence is high, and the downside of disciplined investment is bounded and modest. A 10–15% budget carve-out spread across hygiene, structure, and one partnership yields reusable assets: cleaner facts, faster site, better catalogs, and a partner network that also benefits traditional search and retail syndication. In other words, even in the “LLM underperforms” world, you keep the plumbing upgrades.

The revealed preferences of incumbents also matter: if the platform that profits most from clicks is embracing AI answers that reduce clicks, you should infer the direction of travel.

The CEO’s decision: speed over certainty

Great strategy is often choosing when to be precisely wrong versus roughly right. Here the choice is blunter: be early and compounding, or be late and expensive. You are not deciding whether LLM traffic matters; you are deciding whether defaults will be set without you.

Translate that to a board slide:

Goal: Achieve ≥30% citation rate across core buying prompts within six months in the top three answer engines serving our category.

Levers: Canonical data feed live in 60 days; one material partnership signed in 90; top 50 pages refactored to citation-ready objects; prompt-set QA operational.

Risks: Over-exposure of data; partnership dependence; shifting retrieval standards.

Mitigations: License terms; multi-platform strategy; quarterly schema reviews; budget ceiling of 15% of search program.

Payoff: Presence in the compressed consideration set; reduced CAC volatility as answer engines normalise; durable retrieval position before feedback loops harden.
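The citation-rate goal on this slide can be tracked from the prompt-set QA log. This is a sketch that assumes each run is logged as a (week, engine, prompt, cited) tuple; the log format and engine names are hypothetical and not any tool's export format.

```python
from collections import defaultdict


def weekly_citation_rates(runs: list[tuple[str, str, str, bool]]) -> dict[tuple[str, str], float]:
    """Citation rate per (week, engine) from prompt-set QA runs.

    Each run is (iso_week, engine, prompt_id, brand_cited).
    """
    totals = defaultdict(int)
    cited = defaultdict(int)
    for week, engine, _prompt, was_cited in runs:
        totals[(week, engine)] += 1
        cited[(week, engine)] += int(was_cited)
    return {key: cited[key] / totals[key] for key in sorted(totals)}


def below_goal(rates: dict[tuple[str, str], float], goal: float = 0.30) -> list[tuple[str, str]]:
    """List (week, engine) pairs whose citation rate is under the goal."""
    return sorted(key for key, rate in rates.items() if rate < goal)


runs = [
    ("2025-W02", "engine_a", "p001", True),
    ("2025-W02", "engine_a", "p002", False),
    ("2025-W02", "engine_b", "p001", False),
    ("2025-W02", "engine_b", "p002", False),
    ("2025-W03", "engine_a", "p001", True),
    ("2025-W03", "engine_a", "p002", True),
]
rates = weekly_citation_rates(runs)
print(rates)
print(below_goal(rates))
```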

Pricing power migrates to the shortlist. When decisions compress, demand concentrates on defaults. Brands on the list can sustain price; brands off it compete only on discount and direct response.

Moats look like boring plumbing. The edge is not a clever ad. It is a clean product catalog, consistent naming, fast indices, and contracts your competitors delayed.

Measurement must graduate. Treat traffic as a downstream dividend. Manage to citation rate, partnership advantage, and time-to-lock-in. Report them to the board.

Agencies and tools will re-segment. New winners will be those that operationalise structured truth and retrieval QA, not just backlink alchemy. Expect consolidation around vendors who can prove citation lift.

Optionality has a clock. Windows close silently. If your decision-to-execution cycle is longer than six months, the only winning move is to start now.

When your competitor is building machine memory while you’re awaiting human clicks, you are not in the same game. You’re playing last year’s sport on this year’s field.