As AI assistants like ChatGPT, Google AI Overviews, and Claude become primary discovery channels, brands need specialized tools to track and optimize their visibility. Generative Engine Optimization (GEO) platforms have emerged as the essential infrastructure for understanding how your brand appears in AI-generated responses, monitoring citations, and proactively improving your presence across conversational interfaces.

The GEO landscape has rapidly expanded throughout 2025, with solutions ranging from budget-friendly monitoring tools to enterprise-grade platforms offering deep analytics, prompt volume intelligence, and optimization workflows. This comprehensive guide covers 25 leading platforms, each with distinct strengths in coverage, pricing, and capabilities.

Below, you’ll find a quick-reference table comparing all platforms, followed by detailed deep dives into each solution. Whether you’re a solopreneur tracking basic mentions or an enterprise team building a comprehensive GEO strategy, this index will help you identify the right platform for your needs.

| Platform | Starting Price (2025 Est.) | Models Covered | Improve or Monitor | Prompt Volume Data | Languages |

|---|---|---|---|---|---|

| Spotlight | Free tier; Paid ~$49/mo | ChatGPT, Google AI Overview, Gemini, Claude, Perplexity, Grok, Copilot, Aimode | Also Improve | Yes | All languages |

| Profound | ~$99/mo | ChatGPT | Also Improve | Yes | Unknown |

| Scrunch AI | ~$300/mo | Every LLM (not specified) | Also Improve | No | Multiple (not listed) |

| Peec AI | ~€89/mo (~$95) | ChatGPT, Perplexity, Deepseek | Also Improve | Yes | Unknown |

| Hall | Free tier; Paid ~$49/mo | ChatGPT, Perplexity, Gemini, Copilot, Claude, DeepSeek | Also Improve | Unknown | Unknown |

| Otterly AI | ~$29/mo | Unknown | Unknown | Unknown | Unknown |

| Promptmonitor | ~$29/mo | ChatGPT, Claude, Gemini, DeepSeek, Grok, Perplexity, Google AI Overview, AI Mode | Also Improve | No | Unknown |

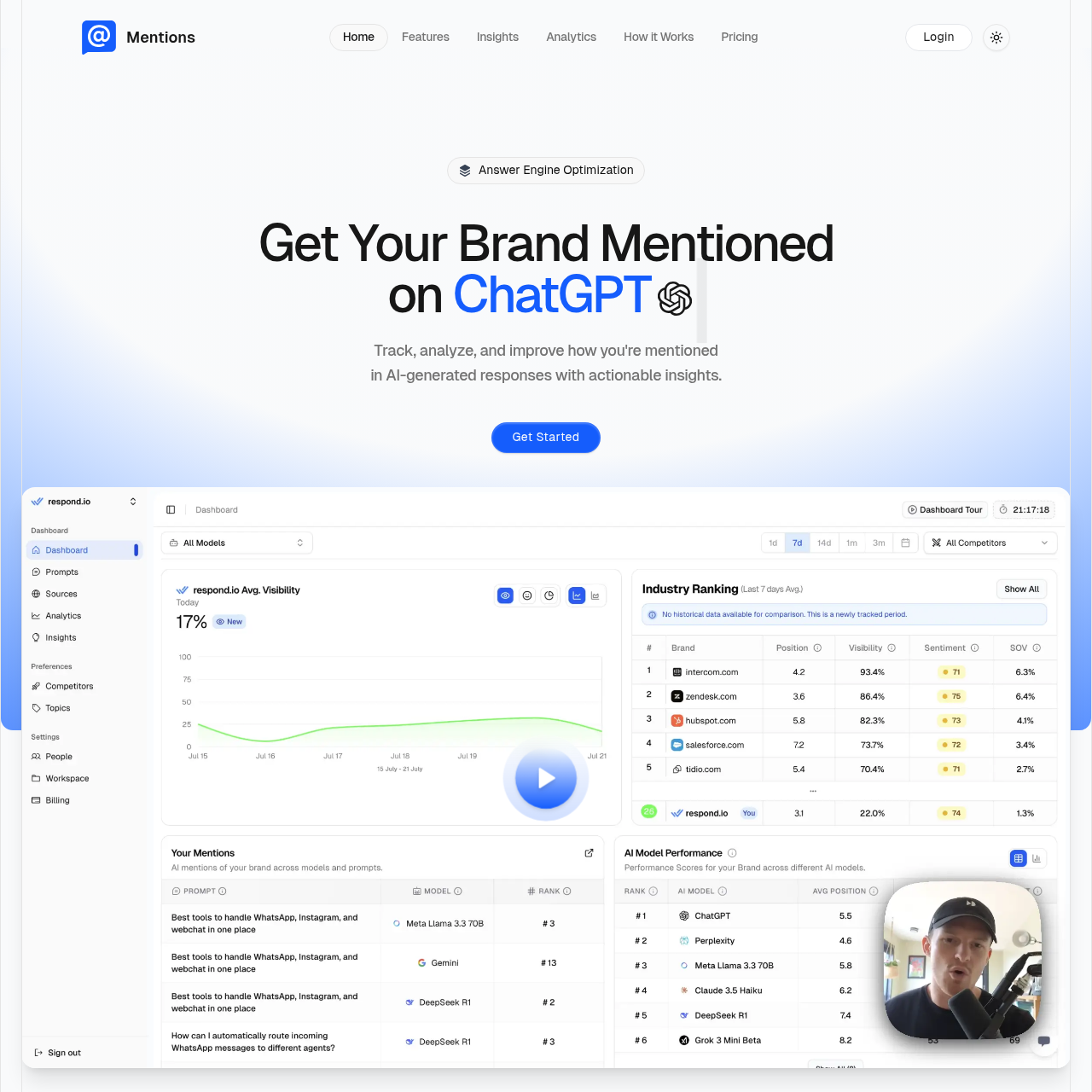

| Mentions.so | ~$49/mo | Unknown | Unknown | Unknown | Unknown |

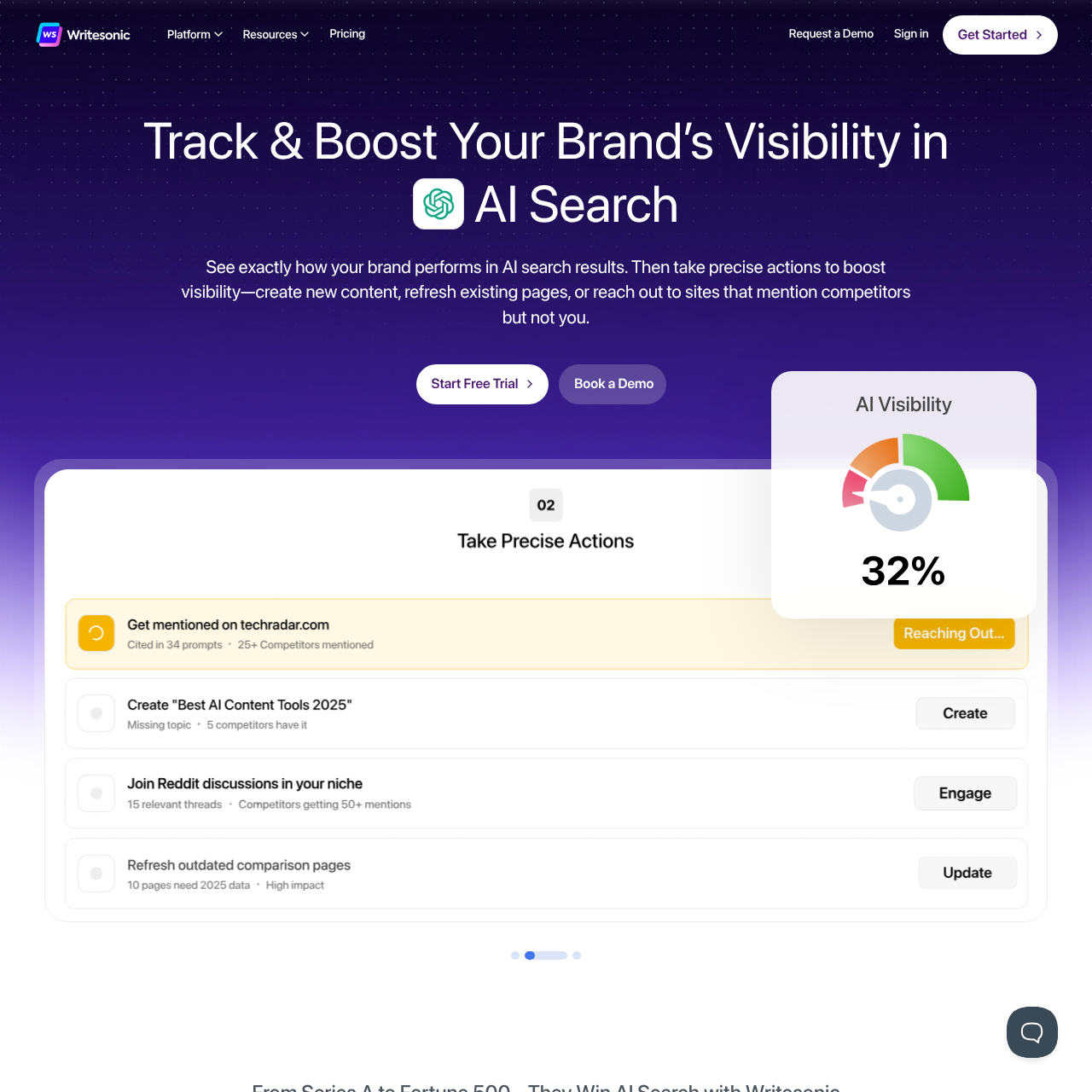

| Writesonic | ~$79/mo | ChatGPT, Gemini, Perplexity, 10+ platforms | Also Improve | Yes | Unknown |

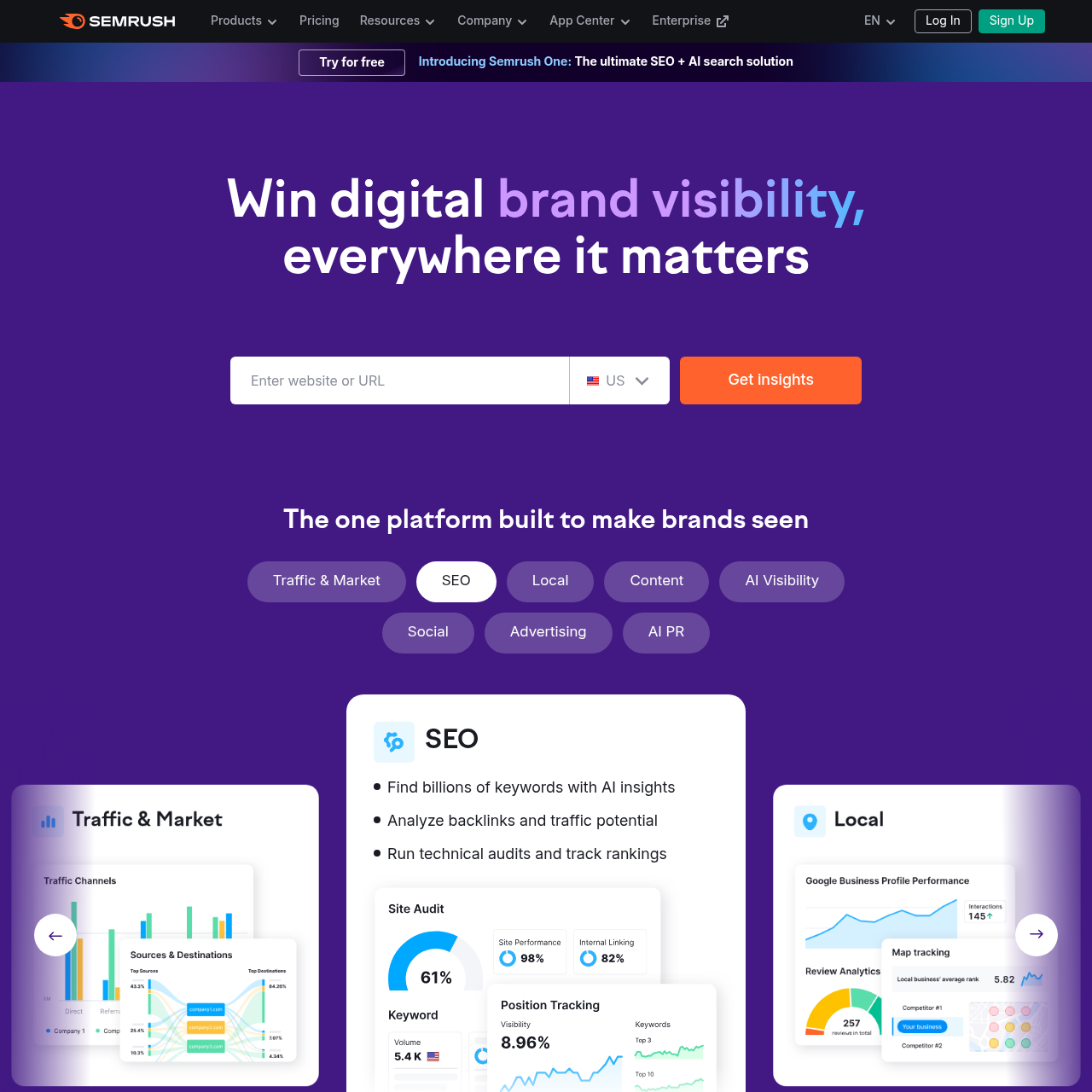

| Semrush AI Toolkit | ~$99/mo (add-on) | ChatGPT, Perplexity | Also Improve | Yes | Unknown |

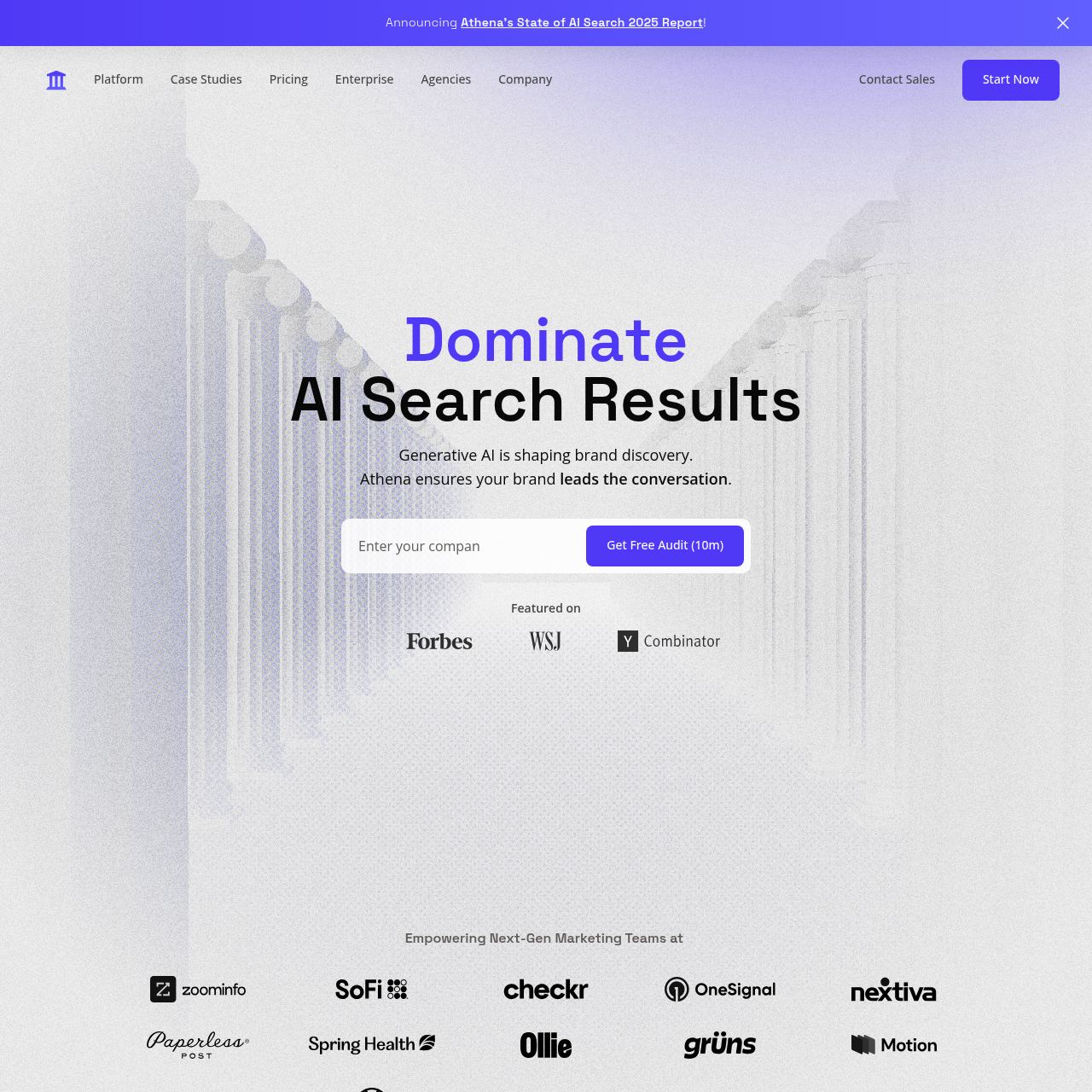

| AthenaHQ | ~$295/mo | ChatGPT, Perplexity, Google AI Overviews, AI Mode, Gemini, Claude, Copilot, Grok | Also Improve | Unknown | Unknown |

| Peekaboo | ~$100/mo | Unknown | Unknown | Unknown | Unknown |

| MorningScore | ~$49/mo | ChatGPT, Google AI Overviews | Also Improve | Unknown | Unknown |

| SurferSEO | ~$79/mo | Google, ChatGPT, other AI chats | Also Improve | Unknown | English, Español, Français, Deutsch, Nederlands, Svenska, Dansk, Polski |

| Airank | ~$49/mo | Unknown | Unknown | Unknown | Unknown |

| AmionAI | Beta; ~$50/mo | ChatGPT | Also Improve | No | Multi-language (not listed) |

| Authoritas AI Search | ~$119/mo | ChatGPT, Gemini, Perplexity, Claude, DeepSeek, Google AI Overviews, Bing AI | Also Improve | Unknown | Multilingual (customizable) |

| ModelMonitor | ~$49/mo | Unknown | Unknown | Unknown | Unknown |

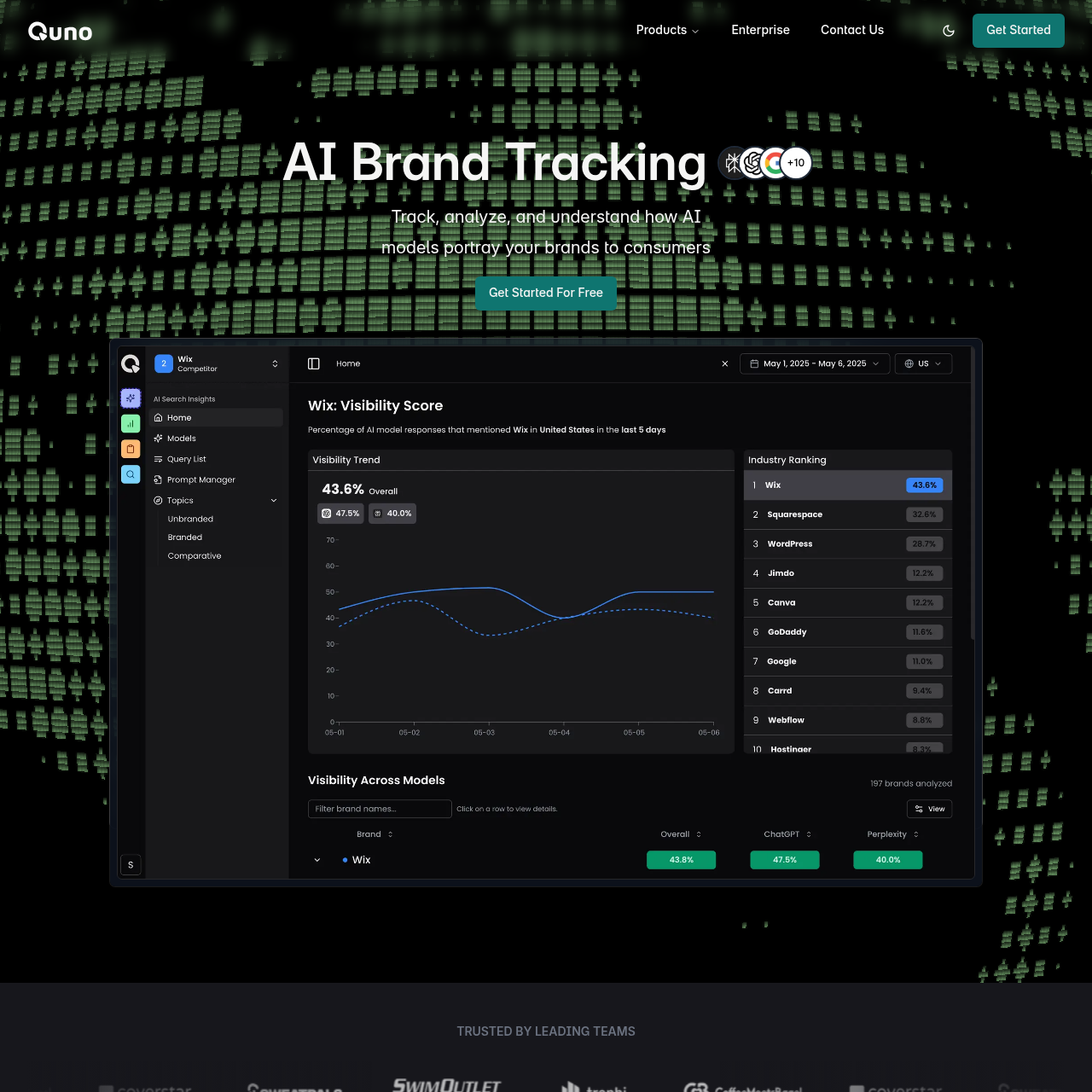

| Quno | ~$99/mo | Unknown | Also Improve | Unknown | Unknown |

| RankScale | Beta; ~$79/mo | Unknown | Unknown | Unknown | Unknown |

| Waikay | ~$19.95/mo | ChatGPT, Google, Claude, Sonar | Also Improve | No | 13 languages |

| XFunnel | ~$199/mo | ChatGPT, Gemini, Copilot, Claude, Perplexity, AI Overview, AI Mode | Also Improve | Yes | Unknown |

| Clearscope | ~$170/mo | ChatGPT, Gemini | Also Improve | Unknown | Unknown |

| ItsProject40 | ~$50/mo | Unknown | Unknown | Unknown | Unknown |

| AI Brand Tracking | ~$99/mo | Unknown | Unknown | Unknown | Unknown |

Now let’s dive deeper into each platform. The following sections provide comprehensive details on pricing, model coverage, key features, and use cases for all 25 GEO solutions. This will help you understand not just what each tool does, but how it fits into different marketing workflows and team structures.

Platform Deep Dives

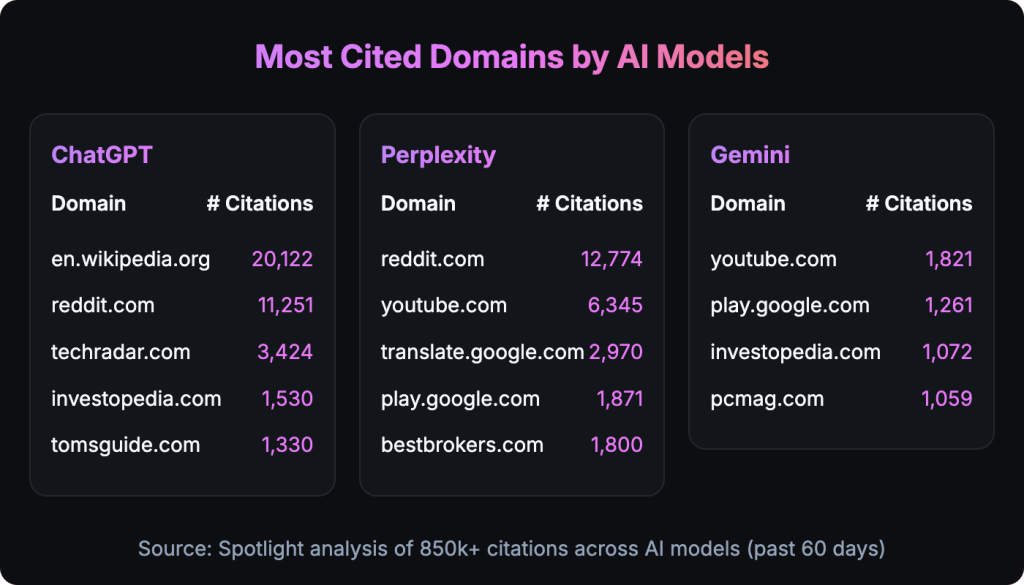

Spotlight

Spotlight tracks and optimizes brand visibility across AI chatbots and conversations, offering GEO and answer engine optimization (AEO) tooling to boost mentions and citations. It combines monitoring with proactive optimization, surfacing citation gaps and geo-targeting opportunities while supplying prompt volume analytics for the assistants it covers. With support for all languages and coverage of 8+ major AI platforms, Spotlight is built for teams that need both deep insights and actionable optimization workflows. The free tier makes it accessible for early-stage brands, while paid plans unlock advanced features for growing teams.

Monitor · Optimize · Prompt Volume · All Languages

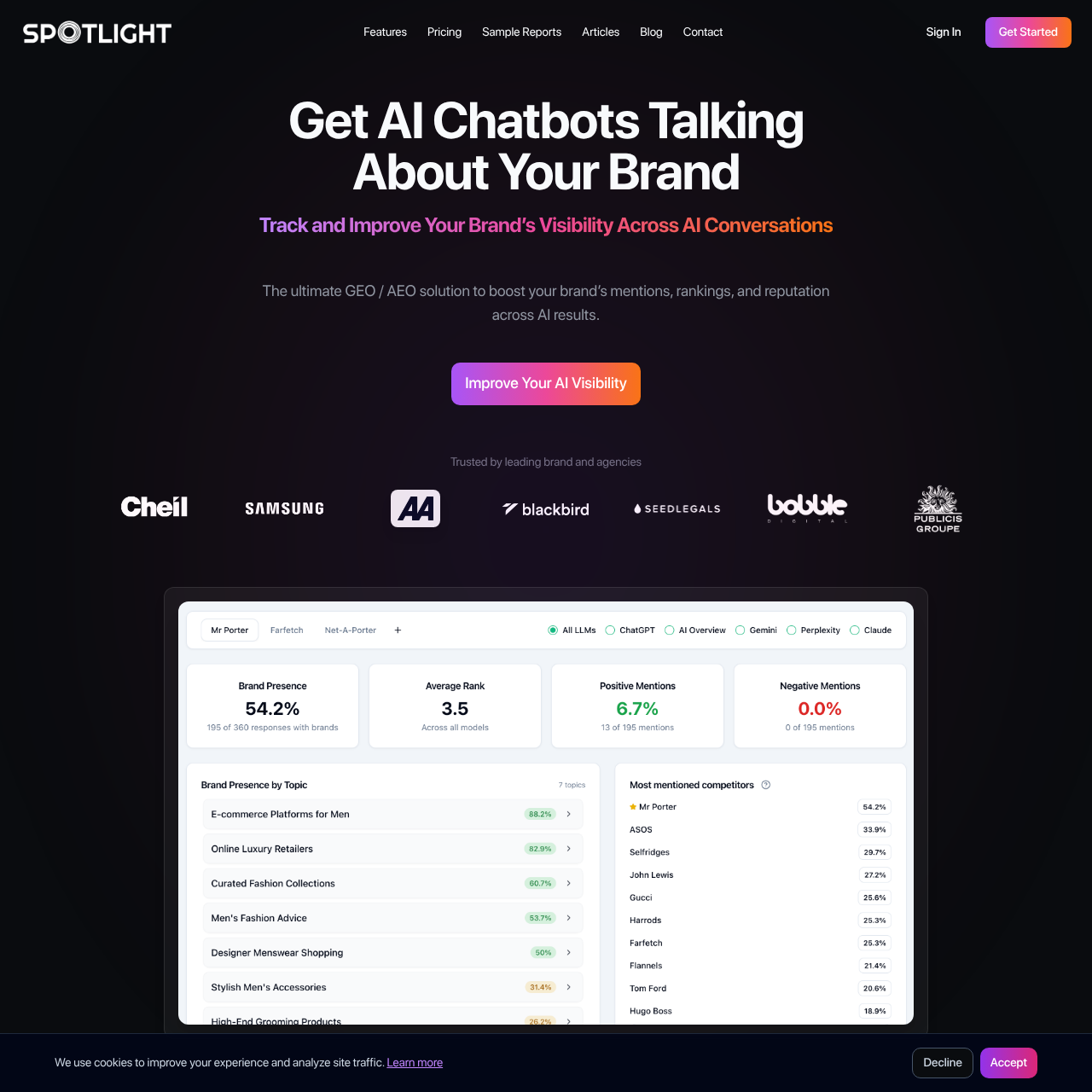

Profound

Profound monitors brand presence in AI-generated answers, providing prompt volumes, conversation insights, and optimization guidance. It focuses on conversation-level intelligence within ChatGPT, pairing detailed prompt volume tracking with actionable guidance on brand and competitor mentions. The coverage listed here is limited to ChatGPT, so Profound best suits brands that prioritize this high-traffic platform and want detailed prompt analytics and optimization strategies within OpenAI’s ecosystem.

Prompt Intelligence · Optimization · ChatGPT Focus

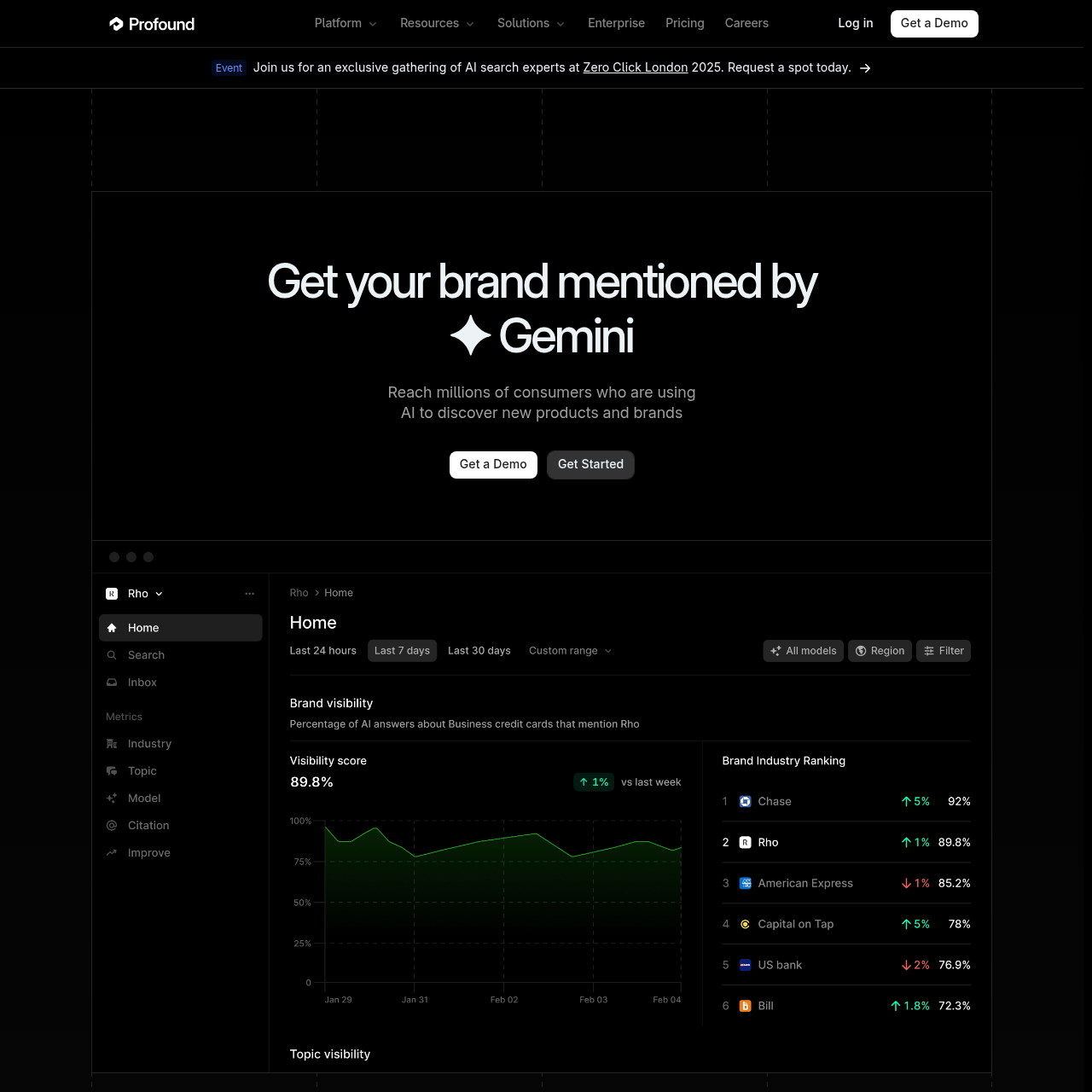

Scrunch AI

Scrunch AI provides proactive monitoring and optimization for AI search results across platforms like ChatGPT and Google AI Overviews. It leans into enterprise-grade content intelligence, flagging misinformation, content gaps, and optimization opportunities. The vendor describes its coverage as every LLM without naming specific models, and its focus on content quality and accuracy positions Scrunch AI for larger organizations that need comprehensive visibility and content strategy guidance. The higher price point (about $300/month) reflects that enterprise positioning.

Enterprise · Content Intelligence · Broad Coverage

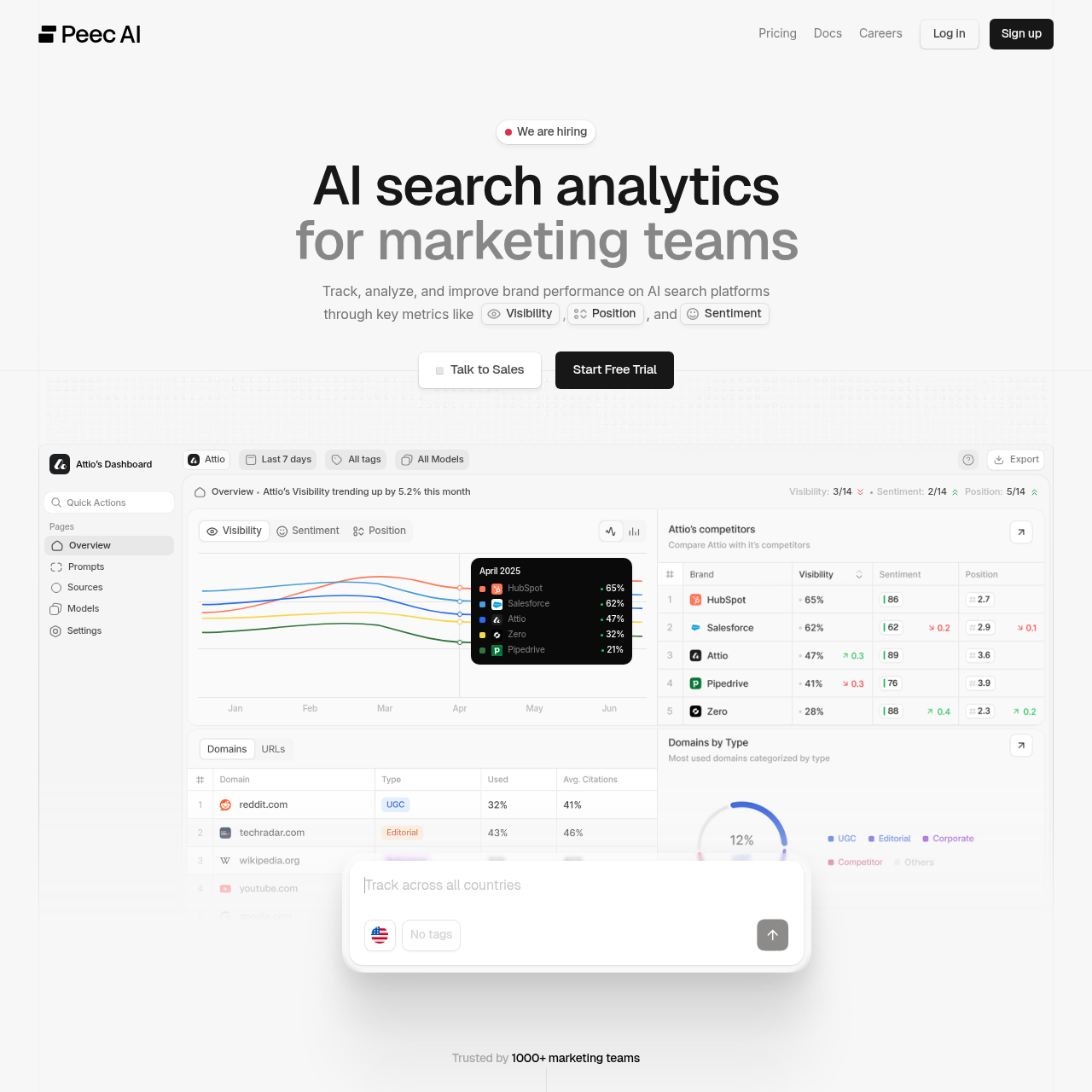

Peec AI

Peec AI tracks visibility, benchmarks competitors, analyzes sources, and reports trends across AI engines, with prompt suggestions and multi-language support. The platform emphasizes competitive benchmarking, trend analysis, and actionable prompt suggestions to steer content strategies across multilingual markets. With coverage of ChatGPT, Perplexity, and DeepSeek, plus prompt volume data, Peec AI offers a balanced approach to monitoring and optimization for international brands that want to understand their competitive position across multiple AI platforms.

Benchmarking · Prompt Suggestions · Competitive Analysis

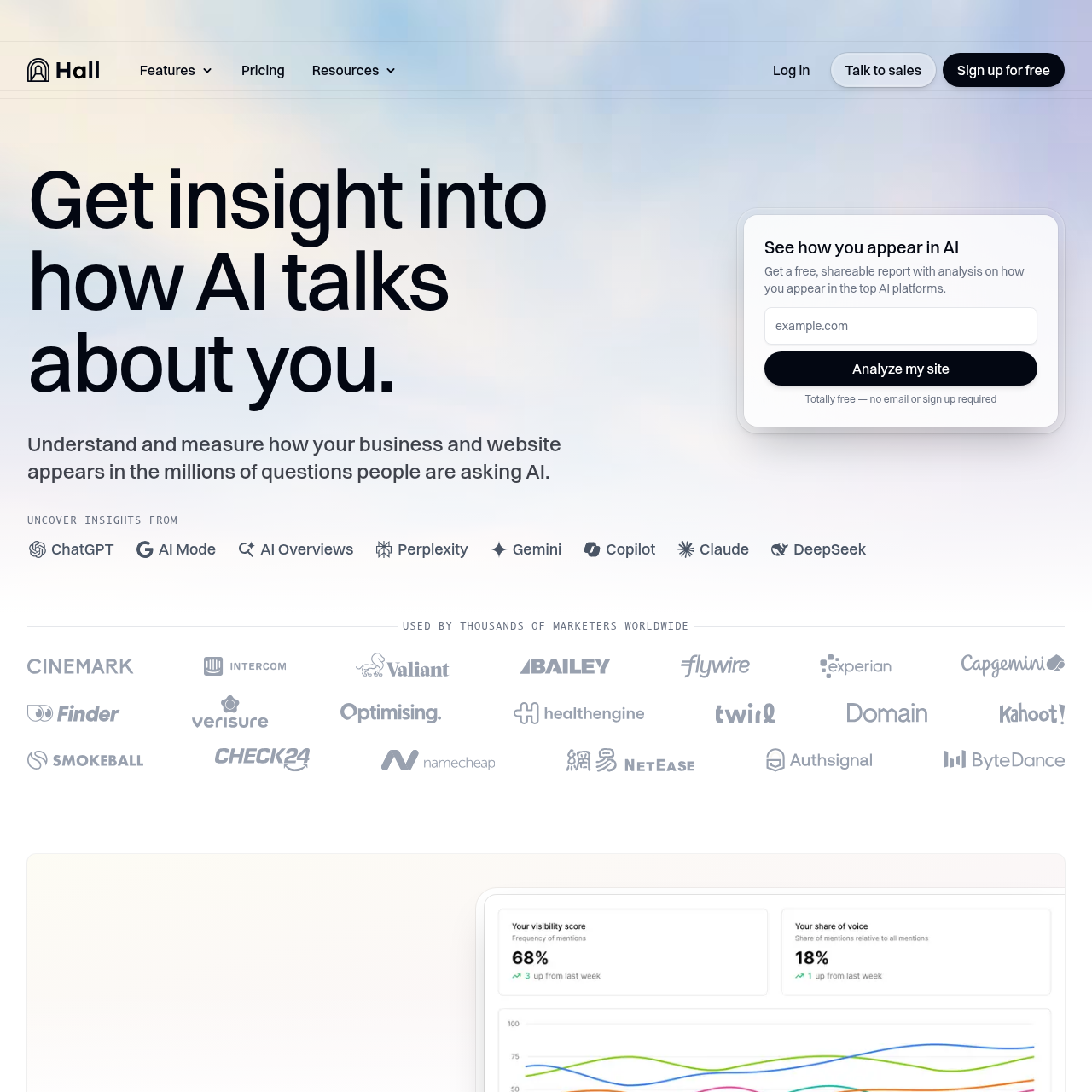

Hall

Hall provides beginner-friendly tracking of brand mentions, citations, and AI agent behavior. Designed for smaller teams and solopreneurs, it offers intuitive real-time dashboards and an approachable workflow. The platform covers six AI assistants (ChatGPT, Perplexity, Gemini, Copilot, Claude, and DeepSeek), making it a solid entry-level option for brands just starting their GEO journey. The free tier keeps the barrier to entry low, while paid plans unlock additional features for growing teams.

SMB · Dashboards · Free Tier

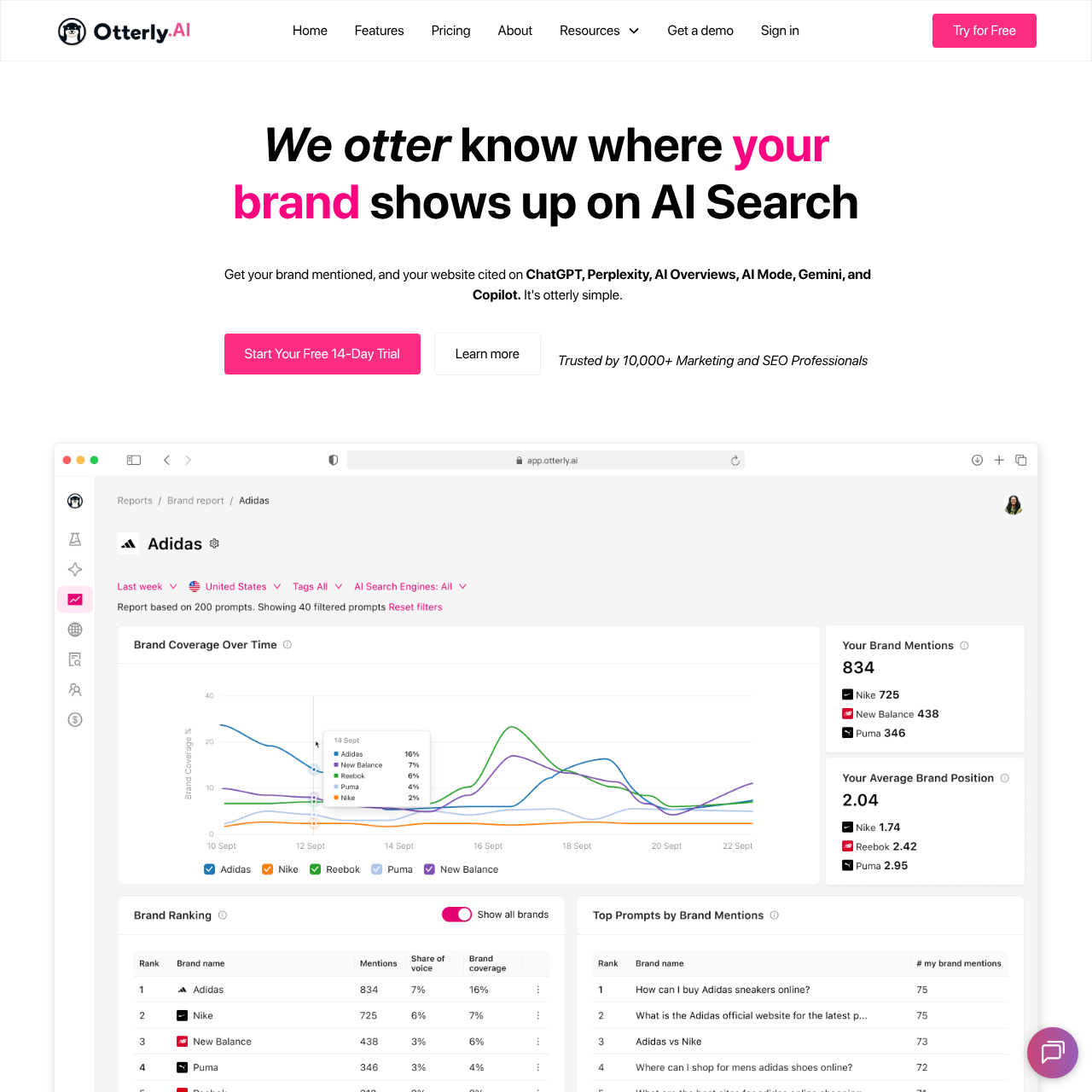

Otterly AI

Otterly AI monitors mentions in AI overviews and chatbots, with keyword research, reports, citation analysis, and prompt generation for solopreneurs and small teams. It targets budget-conscious operators who want quick wins in AI search from a small set of essential tools. At roughly $29/month, it’s one of the most affordable options in this index, making GEO accessible for individual operators and very small teams. Specific model coverage isn’t publicly disclosed; the platform concentrates on core monitoring and optimization features.

Solopreneur · Keyword Research · Budget-Friendly

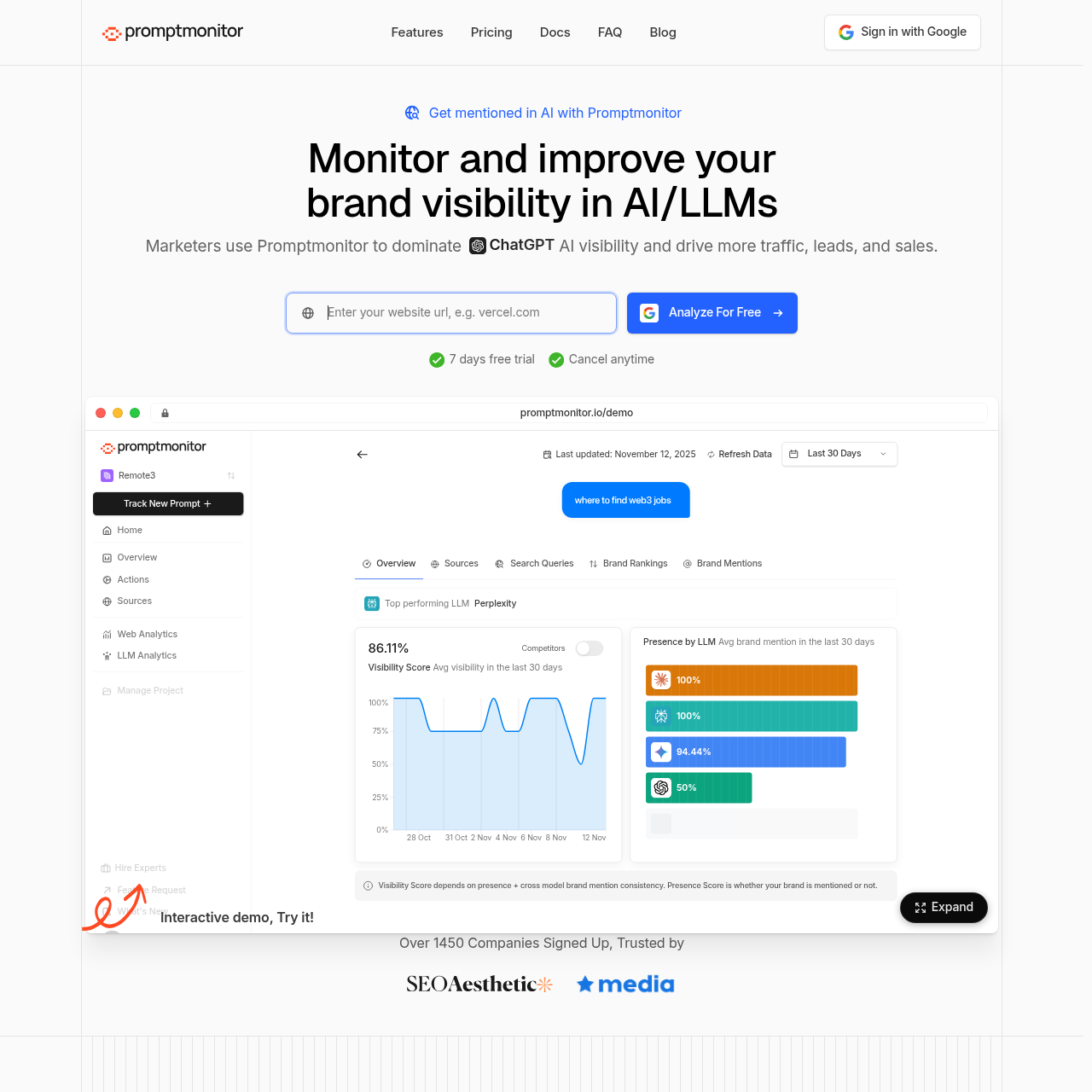

Promptmonitor

Promptmonitor offers multi-model prompt tracking across 8+ LLMs, AI crawler analytics, competitor monitoring, and source discovery with SEO metrics. The platform blends AI crawler analytics with SEO-integrated metrics, delivering a hybrid view of search and generative visibility. With coverage of 8+ major AI platforms including ChatGPT, Claude, Gemini, DeepSeek, Grok, Perplexity, Google AI Overview, and AI Mode, Promptmonitor provides comprehensive multi-model tracking at an affordable price point. The SEO integration makes it particularly valuable for teams already focused on traditional search optimization.

SEO Hybrid · Competitor Monitoring · Multi-Model

Mentions.so

Mentions.so provides AI traffic analytics, performance updates, and white-label reports for tracking brand citations in generative AI. The platform packages comprehensive AI traffic analytics with white-label reporting capabilities, making it specifically tailored for agencies seeking professional client-facing GEO dashboards. While specific model coverage and language support details aren’t publicly disclosed, the platform’s focus on reporting and analytics makes it ideal for agencies that need to present GEO insights to multiple clients in a branded format.

Agency · Reporting · White-Label

Writesonic

Writesonic offers GEO content creation and optimization, with AI article writing, topic analysis, and scanners for improving visibility in AI responses. The platform extends its established content engine with GEO-specific tooling, offering article generation tuned for AI responses and comprehensive visibility scans. With coverage of ChatGPT, Gemini, Perplexity, and 10+ additional platforms, plus prompt volume data, Writesonic combines content creation capabilities with GEO monitoring and optimization. This makes it ideal for content teams that want to both create and optimize content specifically for AI visibility.

Content Generation · Optimization · Prompt Volume

Semrush AI Toolkit

Semrush AI Toolkit is an add-on for prompt tracking, brand performance reports, and strategic insights integrated with broader SEO tools. As an expansion of Semrush’s comprehensive SEO suite, the AI Toolkit integrates prompt tracking and performance reports directly within familiar workflows. With coverage of ChatGPT and Perplexity, plus prompt volume data, it’s designed for teams already using Semrush who want to extend their SEO strategy into GEO. Note that this requires a base Semrush subscription, making it best suited for established SEO teams looking to add AI visibility tracking to their existing toolkit.

SEO Stack · Add-on · Integration

AthenaHQ

AthenaHQ provides a dashboard for GEO scores, benchmarking, content gaps, and persona-based analysis across major AI platforms. The platform offers enterprise-grade benchmarking, mapping GEO scores across multiple AI surfaces. It covers eight platforms (ChatGPT, Perplexity, Google AI Overviews, AI Mode, Gemini, Claude, Copilot, and Grok). The persona-based analysis makes it particularly valuable for brands targeting specific customer segments or markets, though the higher price point (about $295/month) positions it for larger organizations.

Enterprise · Persona Insights · Benchmarking

Peekaboo

Peekaboo offers citation tracking for PR, monitoring mentions in AI engines, competitor visibility, and gap analysis. The platform supports PR teams with comprehensive citation tracking, competitor visibility analysis, and gap identification tailored to brand storytelling. While specific model coverage and technical details aren’t publicly disclosed, Peekaboo positions itself as a PR-focused GEO tool, making it ideal for communications teams that need to understand how their brand narrative appears in AI-generated content and identify opportunities to improve visibility.

PR · Gap Analysis · Citation Tracking

MorningScore

MorningScore provides SEO-integrated AI visibility monitoring, tracking citations in Google AI Overviews and supporting content optimization. The platform adds AI visibility tracking to its established SEO platform, aligning traditional search KPIs with AI overview performance. With coverage of ChatGPT and Google AI Overviews, MorningScore bridges the gap between traditional SEO and GEO, making it ideal for teams that want a unified view of their visibility across both traditional and AI-powered search. The affordable pricing makes it accessible for small to mid-sized teams.

SEO + GEO · Performance Tracking · Integrated

SurferSEO

SurferSEO offers content optimization with an AI Tracker for monitoring and improving brand appearance in AI responses. The AI Tracker evaluates content readiness for AI responses, supported by multilingual optimization insights. With support for eight languages (English, Spanish, French, German, Dutch, Swedish, Danish, and Polish), SurferSEO is particularly valuable for international brands. The platform combines its established content optimization capabilities with GEO monitoring, making it ideal for content teams that want to optimize their existing content for AI visibility.

Multilingual · Content Optimization · 8 Languages

Airank

Airank tracks entity associations and brand rankings in grounded and un-grounded AI responses from Google and Gemini. The platform bridges knowledge graph monitoring with GEO outcomes, focusing on how entities and brands are associated and ranked in AI-generated content. While specific coverage details beyond Google and Gemini aren’t publicly disclosed, Airank’s unique approach to entity tracking makes it valuable for brands that want to understand their position within knowledge graphs and how that translates to AI visibility. The affordable pricing makes it accessible for teams exploring entity-based GEO strategies.

Entity Tracking · Knowledge Graph · Google & Gemini

AmionAI

AmionAI provides brand monitoring with competitor rank, source analysis, sentiment, and actionable insights for early-stage users. The platform targets early-stage brands with comprehensive sentiment tracking, source analysis, and competitor rankings. Currently in beta with ChatGPT coverage, AmionAI offers multi-language support and focuses on delivering actionable insights for brands just beginning their GEO journey. The beta pricing makes it accessible for startups and small teams, though the lack of prompt volume data may limit its appeal for teams that prioritize that metric.

Sentiment · Early Stage · Beta

Authoritas AI Search

Authoritas AI Search offers comprehensive tracking of mentions, share of voice, citations, and custom prompts across multiple LLMs. The platform unifies share-of-voice tracking with custom prompts and multilingual analytics for enterprise marketing teams. It covers seven platforms (ChatGPT, Gemini, Perplexity, Claude, DeepSeek, Google AI Overviews, and Bing AI) and lets teams customize the languages they track. The share-of-voice metrics make it particularly valuable for competitive analysis and market positioning strategies.

Share of Voice · Enterprise · Multilingual

ModelMonitor

ModelMonitor tracks across 50+ AI models, with prompt radar, custom monitoring, competitor analysis, and sentiment. The platform broadens visibility with prompt radar, sentiment insights, and custom monitoring for large model portfolios. While specific model names and language support aren’t publicly disclosed, ModelMonitor’s claim of 50+ model coverage makes it one of the most comprehensive options for brands that need visibility across a wide range of AI platforms. The affordable pricing combined with extensive coverage makes it attractive for teams that prioritize breadth over depth in specific platforms.

Wide Coverage · Sentiment · 50+ Models

Quno

Quno provides response and citation analysis using synthetic personas, with sentiment and keyword insights for brand intelligence. The platform leverages synthetic personas to evaluate responses, unpack sentiment, and spotlight keyword opportunities. While specific model coverage and language support aren’t publicly disclosed, Quno’s unique persona-based approach makes it valuable for brands that want to understand how different customer segments or personas experience their brand in AI-generated content. The sentiment and keyword insights add depth to the persona analysis, making it useful for targeted marketing strategies.

Personas · Sentiment · Keyword Insights

RankScale

RankScale is a GEO platform for audits, performance tracking, citations, and content recommendations in AI search engines. The platform delivers comprehensive GEO audits and performance tracking with competitive benchmarking for AI search engines. Currently in beta, RankScale focuses on providing actionable insights through audits and content recommendations, making it valuable for teams that want structured guidance on improving their AI visibility. While specific model coverage isn’t publicly disclosed, the audit and recommendation features make it useful for brands seeking a strategic approach to GEO optimization.

Audits · Benchmarking · Beta

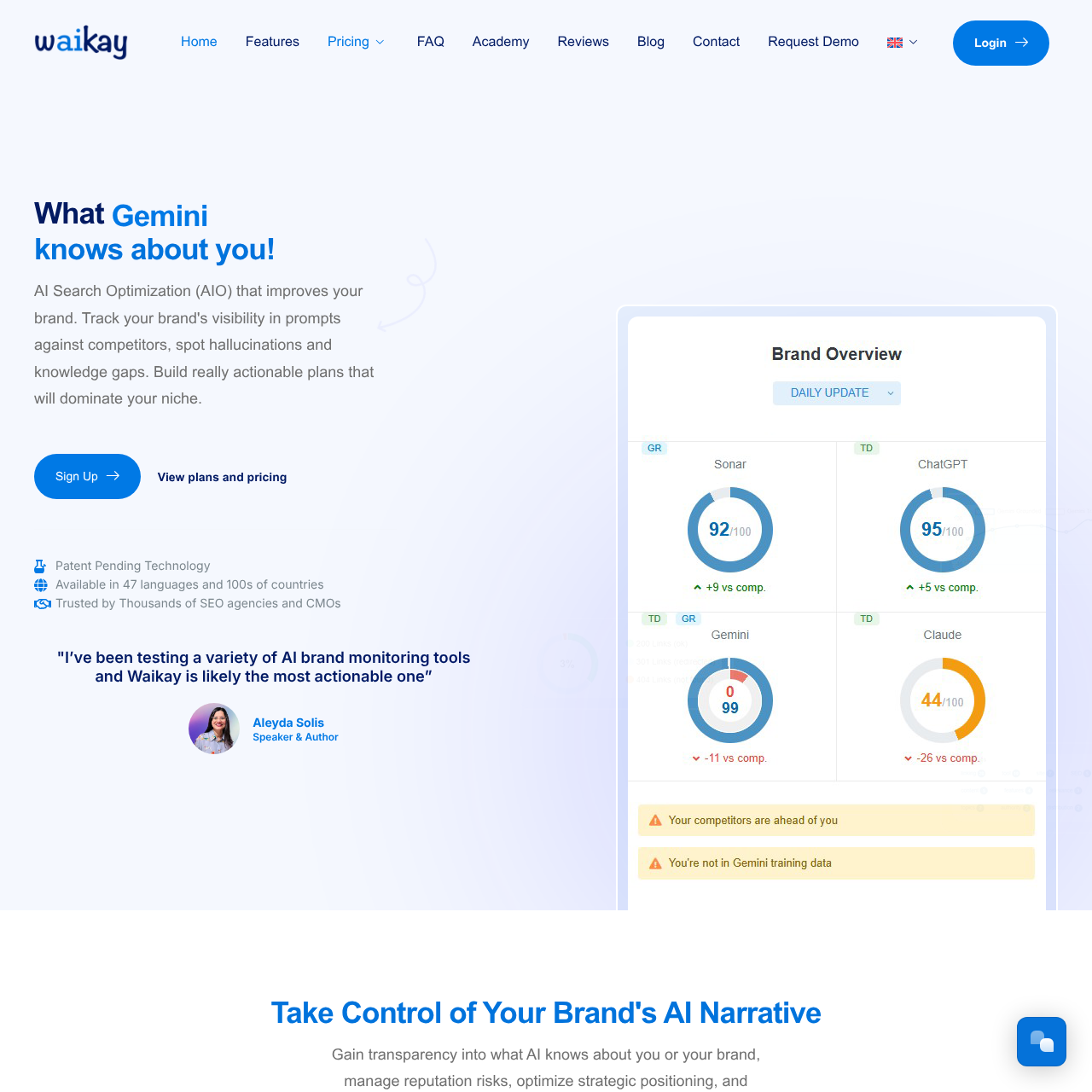

Waikay

Waikay monitors brand representation with AI Brand Score, fact-checking, competitor comparison, and knowledge graph building. The platform introduces an AI Brand Score with fact-checking and knowledge graph insights, designed for multilingual reach. With coverage of ChatGPT, Google, Claude, and Sonar, plus support for 13 languages, Waikay offers comprehensive international coverage at one of the most affordable price points in the market. The fact-checking feature is unique and valuable for brands concerned about accuracy in AI-generated content about their business.

Knowledge Graph · Multilingual · Fact-Checking

XFunnel

XFunnel provides visibility monitoring with competitive positioning, sentiment, and query segmentation by market and persona. The platform blends sentiment analysis with GEO performance to deliver competitive positioning and market segmentation insights. It covers seven platforms (ChatGPT, Gemini, Copilot, Claude, Perplexity, AI Overview, and AI Mode) and includes prompt volume data. The query segmentation by market and persona makes it particularly valuable for brands targeting specific customer segments or geographic markets.

Competitive Intel · Sentiment · Market Segmentation

Clearscope

Clearscope offers content optimization for AI visibility, with mention tracking and SEO integration. The platform couples SEO-grade content insights with GEO monitoring, helping teams refine drafts for AI responses. With coverage of ChatGPT and Gemini, Clearscope focuses on quality over quantity, providing deep content optimization guidance for brands that prioritize these high-traffic platforms. The higher price point reflects its established position in the SEO content optimization market, now extended to GEO, making it ideal for content teams already familiar with Clearscope’s workflow.

SEO + GEO · Content Quality · Content Optimization

ItsProject40

ItsProject40 offers AI competitive monitoring and visibility tools for brands. The platform provides AI visibility tooling focused on competitive benchmarking and brand intelligence. While specific model coverage, capabilities, and language support aren’t publicly disclosed, ItsProject40 positions itself as a competitive monitoring solution, making it valuable for brands that prioritize understanding their position relative to competitors in AI-generated content. The mid-range pricing makes it accessible for small to mid-sized teams.

Competitive Monitoring · Brand Intelligence

AI Brand Tracking

AI Brand Tracking provides dedicated brand tracking in AI conversations with performance metrics. The platform zeroes in on dedicated brand monitoring across AI assistants with performance metrics tailored for marketing teams. While specific model coverage, capabilities, and language support aren’t publicly disclosed, AI Brand Tracking positions itself as a focused solution for brand monitoring, making it ideal for marketing teams that need straightforward performance tracking without the complexity of broader GEO optimization features. The pricing positions it in the mid-market range.

Brand Monitoring · Performance Metrics · Marketing Focus

How to Choose the Right GEO Platform

Match Coverage to Your Footprint: Start with the AI models that drive your customers’ journeys. Platforms like Spotlight and Authoritas offer the broadest footprint, while focused solutions like Profound excel within one primary assistant.

Prioritize Visibility Gaps: Tools such as Scrunch AI and RankScale surface where you are absent in overviews or chat responses. Pair those insights with optimization-centric suites like Writesonic or SurferSEO to close gaps quickly.

Weigh Prompt Intelligence: If understanding prompt demand is critical, lean toward platforms that report prompt volume data in the comparison table, such as Spotlight, Profound, Peec AI, or XFunnel, for richer prompt volume and journey analytics.

Design for Team Workflows: Agencies and enterprise teams benefit from white-label reporting (Mentions.so) or persona-based insights (AthenaHQ, Quno), whereas solo operators may prefer the simplicity of Otterly AI or Hall.
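The selection criteria above can also be applied mechanically. The following is a minimal Python sketch, not part of any vendor's tooling, that shortlists platforms by required model coverage, budget, and prompt volume data. The `Platform` class, the `shortlist` function, and the hand-copied subset of rows are illustrative assumptions; prices are the approximate starting figures from the comparison table, and model names are normalized for matching.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Platform:
    """One row of the comparison table (hypothetical structure for illustration)."""
    name: str
    price_usd: float        # approximate starting paid price per month
    models: frozenset       # models listed in the table; empty if "Unknown"
    prompt_volume: bool     # True only where the table says "Yes"


# Hand-copied subset of the comparison table. Illustrative, not exhaustive.
PLATFORMS = [
    Platform("Spotlight", 49, frozenset({
        "ChatGPT", "Google AI Overview", "Gemini", "Claude",
        "Perplexity", "Grok", "Copilot", "AI Mode"}), True),
    Platform("Profound", 99, frozenset({"ChatGPT"}), True),
    Platform("Peec AI", 95, frozenset({"ChatGPT", "Perplexity", "DeepSeek"}), True),
    Platform("Promptmonitor", 29, frozenset({
        "ChatGPT", "Claude", "Gemini", "DeepSeek", "Grok",
        "Perplexity", "Google AI Overview", "AI Mode"}), False),
    Platform("XFunnel", 199, frozenset({
        "ChatGPT", "Gemini", "Copilot", "Claude",
        "Perplexity", "AI Overview", "AI Mode"}), True),
    Platform("Waikay", 19.95, frozenset({"ChatGPT", "Google", "Claude", "Sonar"}), False),
]


def shortlist(platforms, required_models, max_price, need_prompt_volume=False):
    """Return names of platforms that cover every required model
    within budget, cheapest first."""
    required = frozenset(required_models)
    matches = [
        p for p in platforms
        if required <= p.models                      # covers all required models
        and p.price_usd <= max_price                 # fits the monthly budget
        and (p.prompt_volume or not need_prompt_volume)
    ]
    return [p.name for p in sorted(matches, key=lambda p: p.price_usd)]


if __name__ == "__main__":
    # Needs ChatGPT and Perplexity, prompt volume data, at most $100/month.
    print(shortlist(PLATFORMS, {"ChatGPT", "Perplexity"}, 100, need_prompt_volume=True))
    # → ['Spotlight', 'Peec AI']
```

Platforms whose coverage is listed as "Unknown" would carry an empty model set and never match a coverage requirement, which is a conservative choice: confirm coverage with the vendor before ruling them out.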

Pricing and coverage reflect the latest available data as of November 2025 and may change. Verify current plans and feature sets directly with each provider.