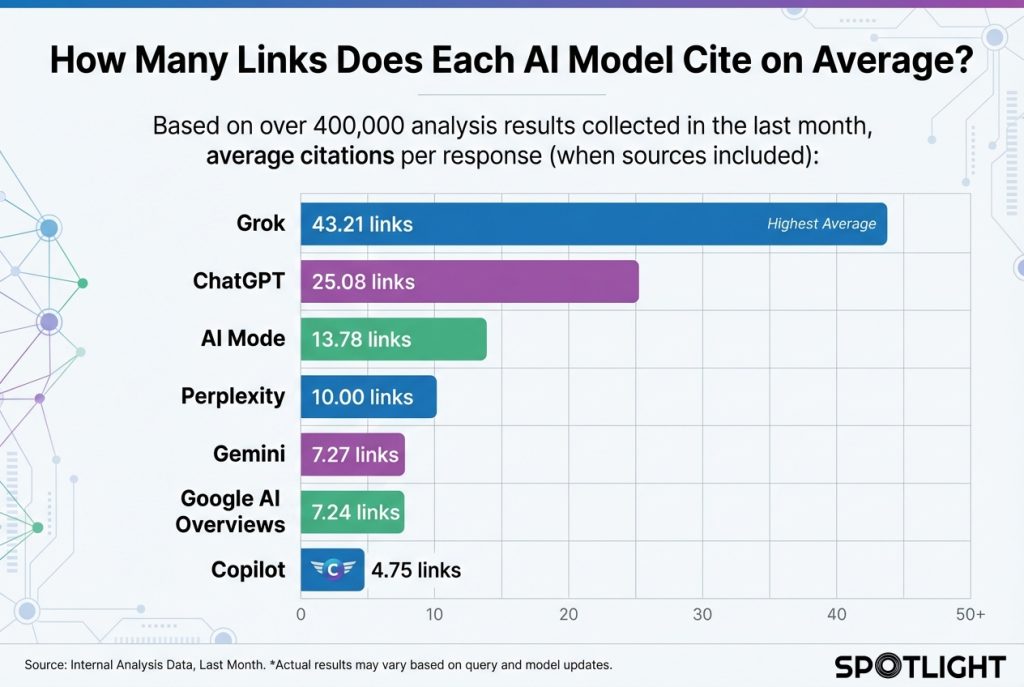

When AI chatbots answer questions, they often include links to websites as sources. But how many links does each AI model typically cite? We analyzed over 400,000 responses from the last month across 7 major AI platforms to find out.

The results show big differences between models. Some AI platforms cite many sources, while others cite few or none. Understanding these patterns helps brands know where to focus their content efforts.

How Many Links Does Each AI Model Cite on Average?

Based on data from over 400,000 analysis results collected in the last month, here’s how many links each AI model cites per response – when it includes sources:

- Grok: 43.21 links per response

- ChatGPT: 25.08 links per response

- AI Mode: 13.78 links per response

- Perplexity: 10.00 links per response

- Gemini: 7.27 links per response

- Google AI Overviews: 7.24 links per response

- Copilot: 4.75 links per response

When AI models do include sources, they often cite many of them. Grok leads with more than 43 links per response, and ChatGPT follows at about 25. At the other end, Copilot averages fewer than 5 links per response even when it cites sources.

What Percentage of Responses Include Links?

Not every AI response includes links. Here’s what percentage of responses from each model include at least one source link:

- Perplexity: 98.67% of responses include links

- AI Mode: 91.99% of responses include links

- Copilot: 85.18% of responses include links

- Google AI Overviews: 75.92% of responses include links

- Grok: 70.17% of responses include links

- Gemini: 54.86% of responses include links

- ChatGPT: 35.00% of responses include links

This reveals an important pattern: Perplexity and AI Mode include links in almost every response, making them consistent citation opportunities. ChatGPT, despite citing many links when it does include them, only includes links in about one-third of its responses. This suggests ChatGPT is selective about when to provide sources.
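
The two lists above can be combined into one figure: the average number of links per response across all responses, including those with no links. This number is not part of the published data. It is a derived estimate, obtained by multiplying each model's average link count (when links are present) by the share of its responses that include links. A minimal sketch, with the function name `expected_links` chosen here for illustration:

```python
# Figures copied from the two lists above:
# (average links when sources are present, share of responses with links)
MODELS = {
    "Grok": (43.21, 0.7017),
    "ChatGPT": (25.08, 0.3500),
    "AI Mode": (13.78, 0.9199),
    "Perplexity": (10.00, 0.9867),
    "Gemini": (7.27, 0.5486),
    "Google AI Overviews": (7.24, 0.7592),
    "Copilot": (4.75, 0.8518),
}


def expected_links(avg_when_present: float, share_with_links: float) -> float:
    """Average links per response across ALL responses, cited or not."""
    return avg_when_present * share_with_links


# Sort models from most to fewest expected links per response.
ranking = sorted(
    ((name, expected_links(*stats)) for name, stats in MODELS.items()),
    key=lambda item: item[1],
    reverse=True,
)

for name, value in ranking:
    print(f"{name}: {value:.2f}")
```

On this combined measure Grok still leads (about 30 links per response), but ChatGPT drops from second to fourth (about 8.8), behind AI Mode and Perplexity, because roughly two-thirds of its responses carry no links at all.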

What Do These Numbers Mean for Your Brand?

These citation patterns matter because they show where your content has the best chance of appearing. If a model cites many links, there are more opportunities for your website to be included. But it also means more competition for those spots.

For example, when Grok includes sources, it averages over 43 links per response, creating many citation opportunities. However, with so many links, each individual link gets less attention. ChatGPT’s pattern is different: it includes links in only about 35% of responses, though it averages 25 links when it does. A citation there is rarer and potentially more valuable, but it still shares the response with many other links.

According to research from Search Engine Journal, citation patterns in AI responses directly impact website traffic. Brands that appear in AI citations often see increased organic traffic from users clicking through to learn more.

Why Do Some Models Cite More Links Than Others?

Different AI models have different approaches to providing sources. Some models are designed to show many sources to give users multiple perspectives. Others focus on fewer, higher-quality sources.

Grok’s high citation count (43+ links when sources are included) likely comes from its design to show diverse viewpoints. The platform aims to present many sources so users can explore different angles on a topic. This aligns with research from TechCrunch showing that Grok emphasizes source diversity in responses.

Perplexity takes a different approach: it includes links in nearly 99% of responses, but averages 10 links per response when it does. This makes Perplexity the most consistent citation opportunity. ChatGPT shows a more selective pattern: when it includes links, it averages 25 per response, but it only includes links in about 35% of responses overall. This matches findings from Nature that show ChatGPT prioritizes quality and relevance when deciding whether to include sources.

How Can You Optimize Content for Each Model?

Understanding citation patterns helps you tailor your content strategy. Here’s how to approach each model:

For High-Citation Models (Grok, ChatGPT, AI Mode, Perplexity)

These models cite many sources when they include links, so there are more opportunities to get included. Focus on:

- Creating diverse content: These models look for multiple perspectives, so cover different angles of your topic

- Building authority: Even with many citations, these models still prefer authoritative sources

- Optimizing for specific queries: With more citation spots, you can target niche topics where you have expertise

For Consistent Citation Models (Perplexity, AI Mode, Google AI Overviews)

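These models include links in most of their responses (roughly 76-99%), making them reliable citation opportunities. Copilot, at 85%, also falls in this range, but it is grouped with the low-citation models below because it cites few links per response. Focus on: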

- Creating comprehensive content: These models prefer in-depth, well-researched pages

- Establishing expertise: Show clear author credentials and original research

- Optimizing technical signals: Ensure your site has proper schema markup and clear structure, as noted in Google’s structured data guidelines
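
As an illustration of the schema markup mentioned in the last point, the sketch below builds a small schema.org `Article` object and wraps it in the `<script type="application/ld+json">` tag used to embed JSON-LD in a page. The headline, date, author name, and job title are hypothetical placeholders, and the helper name `to_json_ld_script` is made up for this example. The citation data in this post does not show whether any particular AI model reads this markup, so treat it as general structured-data hygiene rather than a confirmed citation factor.

```python
import json

# All values below are hypothetical placeholders, not taken from this post.
article_markup = {
    "@context": "https://schema.org",
    "@type": "Article",
    "headline": "Example headline",
    "datePublished": "2025-01-01",
    "author": {
        "@type": "Person",
        "name": "Jane Doe",
        "jobTitle": "Example job title",
    },
}


def to_json_ld_script(data: dict) -> str:
    """Wrap a dict in the script tag used to embed JSON-LD in an HTML page."""
    body = json.dumps(data, indent=2)
    return '<script type="application/ld+json">\n' + body + "\n</script>"


snippet = to_json_ld_script(article_markup)
print(snippet)
```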

For Low-Citation Models (Gemini, Copilot)

These models cite fewer sources per response (about 7 for Gemini and 5 for Copilot), so each citation slot is scarcer and potentially more valuable. Focus on:

- Becoming the definitive source: Create content that’s clearly the best resource on a topic

- Demonstrating expertise: Show why your content is more authoritative than competitors

- Targeting high-value queries: Since each response has only a few citation slots, focus on topics where being cited has the most impact

What Does This Mean for Your SEO Strategy?

Traditional SEO focuses on ranking in search results. AI SEO (also called GEO – Generative Engine Optimization) focuses on getting cited in AI responses. These citation patterns show that AI SEO requires a different approach than traditional SEO.

As Search Engine Journal explains, AI models don’t rank pages the same way search engines do. Instead, they select sources based on relevance, authority, and how well content answers specific questions.

The citation data shows interesting patterns: models like Perplexity and AI Mode include links in almost every response, making them consistent opportunities. Grok includes links in 70% of responses but averages 43 links when it does, creating many citation spots. ChatGPT is more selective, including links in only 35% of responses, but when it does, it averages 25 links, suggesting those citations are carefully chosen.

Key Takeaways

Understanding citation patterns helps you make smarter decisions about where to focus your content efforts:

- Grok cites the most sources (43+ per response when links are present), offering many opportunities but more competition

- ChatGPT cites heavily when it includes sources (25 per response), but only includes links in 35% of responses, making those citations more selective

- Perplexity and AI Mode include links in most responses (99% and 92% respectively), making them the most consistent citation opportunities

- Gemini and Copilot cite fewer sources (7 and 5 per response when links are present), making each citation more valuable

- Content strategy should vary by model based on both citation frequency and average links per response

By tracking which models cite your content and how often, you can optimize your strategy for maximum visibility across AI platforms.
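
One way to do this kind of tracking is sketched below, assuming you already have a log of AI responses with the URLs each one cited. The record format, the sample data, and the function name `citation_share` are all hypothetical. The function reports, for each model, the fraction of logged responses that cite a given domain or one of its subdomains.

```python
from collections import defaultdict
from urllib.parse import urlparse

# Hypothetical log: one (model, cited URLs) record per AI response.
responses = [
    ("Perplexity", ["https://example.com/guide", "https://other.example.org/a"]),
    ("Perplexity", ["https://other.example.org/b"]),
    ("ChatGPT", []),
    ("ChatGPT", ["https://www.example.com/pricing"]),
]


def citation_share(records, domain):
    """Per model: fraction of responses citing `domain` or a subdomain of it."""
    totals = defaultdict(int)
    hits = defaultdict(int)
    for model, urls in records:
        totals[model] += 1
        hosts = [(urlparse(url).hostname or "") for url in urls]
        # Count the response once, however many of its links match.
        if any(h == domain or h.endswith("." + domain) for h in hosts):
            hits[model] += 1
    return {model: hits[model] / totals[model] for model in totals}


shares = citation_share(responses, "example.com")
print(shares)
```

Counting each response once, rather than counting every matching link, keeps the result comparable to the "percentage of responses that include links" figures reported earlier.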

Frequently Asked Questions

How many links does ChatGPT cite per response?

Based on data from the last month, when ChatGPT includes links, it averages 25.08 links per response. However, ChatGPT only includes links in about 35% of its responses, making it more selective than models like Perplexity that include links in nearly every response. When ChatGPT does cite sources, it includes many of them, suggesting careful selection of multiple authoritative references.

Which AI model cites the most sources?

Grok cites the most sources when it includes links, averaging 43.21 links per response. That is about 1.7 times the average of ChatGPT, the next highest model. Grok appears to be designed to show diverse perspectives, which may explain why it includes so many source links. However, it’s worth noting that Perplexity includes links in 98.67% of responses (compared to Grok’s 70.17%), making Perplexity the most consistent citation opportunity overall.

How can I get my website cited by AI models?

To get cited by AI models, create high-quality, authoritative content that directly answers common questions. Use clear headings, proper schema markup, and demonstrate expertise. Focus on topics where your brand has unique insights. Track which models cite your content to understand what’s working.

Do more citations mean more traffic?

Not necessarily. Models that cite many sources per response (like Grok) may drive less traffic per citation because users have more options. Models that cite fewer sources per response (like Copilot or Gemini) may drive more traffic per citation because each link gets more attention. The value depends on both the number of citations and how users interact with them.

How often do AI models update their citations?

AI models can change which pages they cite as new content is published and indexed. The exact frequency varies by model, and this analysis did not measure it. Regular content updates and monitoring help ensure your content stays relevant.

Should I optimize for all AI models or focus on specific ones?

It depends on your goals and audience. If you want the most citation slots, optimize for models with high link counts like Grok. If you want to appear in the largest share of responses, focus on models that almost always include links, like Perplexity and AI Mode. If you want rarer citations, look at selective models like ChatGPT. Many brands use a balanced approach, creating content that works well across multiple models while tracking which ones drive the most traffic.

This post was written by Spotlight’s content generator.